[Day 105] Cisco ISE Mastery Training: Multi-Data Center Deployments

Introduction

When organizations scale beyond a single data center, identity and access control becomes one of the hardest challenges in enterprise security. Cisco ISE, by design, is built to provide centralized policy, consistent security enforcement, and seamless redundancy across globally distributed networks.

In a multi-data center deployment, the stakes are higher:

- You’re not only protecting one campus or HQ, but entire regional hubs, remote branches, and cloud-integrated workloads.

- Authentication must remain fast, synchronized, and resilient, even if WAN links fail or a whole data center goes offline.

- Policies must be enforced consistently across tens of thousands of endpoints, multiple PSNs, and geographically redundant PAN/MNT nodes.

This training module is not about high-level theory; it gives you a step-by-step technical blueprint for architecting, deploying, and validating ISE in multi-data center designs. You’ll learn how to:

- Build geographically separated ISE nodes with sync between primary and secondary PAN/MNT.

- Place PSNs close to users for optimal performance.

- Implement load balancing with F5/Citrix ADC for seamless failover.

- Run CLI & GUI validations to confirm resiliency across regions.

- Design DR (Disaster Recovery) drills specific to multi-site deployments.

By the end of this session, you won’t just “know” multi-data center ISE; you’ll have a working playbook to architect, test, and present enterprise-class ISE deployments to customers, managers, or in your CCIE Security lab exam.

Problem Statement

- Single-site fragility: A PAN/MnT outage or PSN overload in one DC can ripple globally.

- Latency & trust: Cross-DC latency, DNS/GSLB, and PKI/FQDN mismatches often break portals and pxGrid.

- Operational complexity: Many NADs, many RADIUS paths, multiple AD sites—difficult to steer traffic correctly.

- Compliance: Need evidence that wired/wireless/VPN continue at target RTO/RPO, with accounting continuity.

Goal: Architect and validate an ISE fabric that serves locally, fails regionally, and manages globally.

Solution Overview

- Personas:

- PAN: Active in DC-A, Standby in DC-B (one active admin at a time).

- MnT: Primary in DC-A, Secondary in DC-B (logging continuity).

- PSN: Pools in each DC; clients/NADs prefer local PSNs.

- Traffic steering: Load balancer VIPs per DC + GSLB/DNS for geo-proximity and failover.

- Trust: Consistent PKI chains and FQDN strategy (VIP CN/SAN) across sites.

- Dependencies: AD Sites, NTP, DNS, routing, CoA reachability modeled per region.

- Backups: Central secure repo; config restore to PAN, ops restore to MnT.

Design guardrails (verify for your ISE version): Aim for stable, low-loss, low-latency links between nodes; ensure adequate bandwidth for replication; strict time sync (NTP); packet loss ≪1%.
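
The persona layout above can be written down as data and sanity-checked before any node is built. This is a minimal sketch, not an ISE API: the `Node` class, the `check_layout` function, and the two-PSNs-per-site minimum are assumptions made for illustration, and the node names simply mirror the sample lab in this module.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    name: str
    site: str       # e.g. "DC-A"
    persona: str    # "PAN", "MNT", or "PSN"
    role: str = ""  # "primary"/"secondary" for PAN and MNT; empty for PSN


def check_layout(nodes, min_psn_per_site=2):
    """Return a list of problems; an empty list means the layout passes."""
    problems = []
    for persona in ("PAN", "MNT"):
        members = [n for n in nodes if n.persona == persona]
        roles = Counter(n.role for n in members)
        if roles["primary"] != 1 or roles["secondary"] != 1:
            problems.append(f"{persona}: expected 1 primary and 1 secondary")
        if len({n.site for n in members}) < 2:
            problems.append(f"{persona}: not spread across two sites")
    psn_per_site = Counter(n.site for n in nodes if n.persona == "PSN")
    for site in sorted({n.site for n in nodes}):
        if psn_per_site[site] < min_psn_per_site:
            problems.append(f"{site}: only {psn_per_site[site]} PSN(s)")
    return problems


# Hypothetical inventory matching the two-site lab described in this module.
LAB = [
    Node("PAN-A", "DC-A", "PAN", "primary"),
    Node("PAN-B", "DC-B", "PAN", "secondary"),
    Node("MNT-A", "DC-A", "MNT", "primary"),
    Node("MNT-B", "DC-B", "MNT", "secondary"),
    Node("PSN-A1", "DC-A", "PSN"),
    Node("PSN-A2", "DC-A", "PSN"),
    Node("PSN-B1", "DC-B", "PSN"),
    Node("PSN-B2", "DC-B", "PSN"),
]
```

Calling `check_layout(LAB)` returns an empty list; removing one PSN from a site makes the function report that site.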

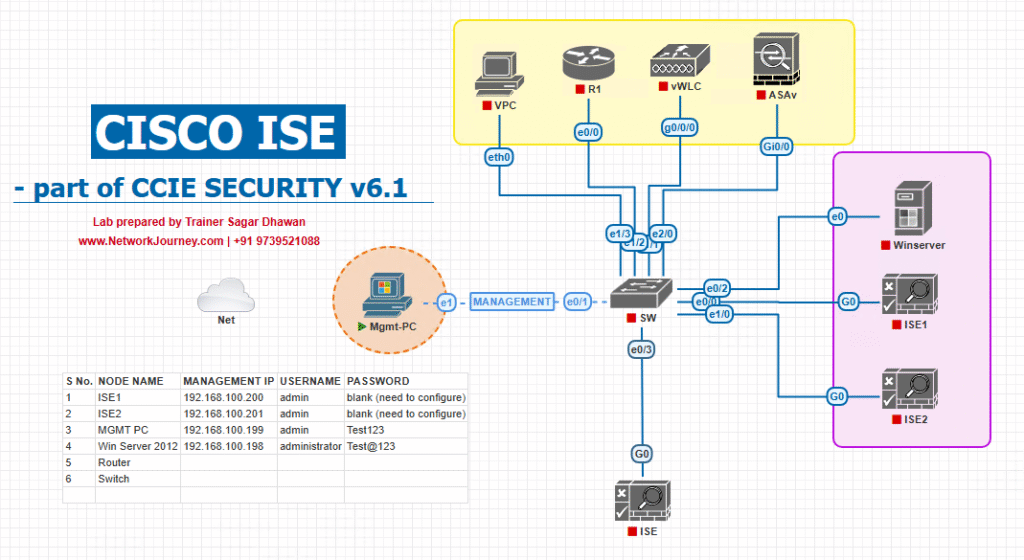

Sample Lab Topology (VMware/EVE-NG + NADs/WLC/Endpoints)

Sites

- DC-A (Primary): PAN-A (Active), MNT-A (Primary), PSN-A1/A2, LB-A, GSLB, AD-A, PKI-A, NTP/DNS-A

- DC-B (Secondary): PAN-B (Standby), MNT-B (Secondary), PSN-B1/B2, LB-B, GSLB, AD-B, PKI-B, NTP/DNS-B

Access

- Switches: Catalyst 9300 (dot1x/MAB)

- Wireless: 9800-CL (CWA/BYOD)

- VPN: ASA/FTD (AAA to ISE)

- Endpoints: Win11 (EAP-TLS), iOS (Guest/BYOD), IoT (MAB)

Step-by-Step GUI Configuration Guide (with CLI Validation)

Phase 0 — Pre-Build Design Worksheet (fill before you start)

Checklist

- FQDN plan for Admin, Portal, RADIUS VIPs per DC (e.g., ise-admin.corp, portal.corp, radius-a.corp, radius-b.corp)

- PKI: Root/intermediate chains; cert SANs include VIPs & hostnames

- GSLB/DNS: Health checks for 1812/1813/443; short TTL (e.g., 30–60 s)

- AD Sites: ISE nodes in each data center prefer their local domain controllers

- NTP/DNS reachability per node

- CoA path from PSN subnets to NADs allowed (UDP/3799)

- Backup repository (SFTP) + encryption key
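
One worksheet item that is easy to check mechanically is whether the planned FQDNs are all covered by a certificate's SAN list. The sketch below is an illustration only: `missing_sans` is a hypothetical helper, the names are the example FQDNs from the checklist, and the wildcard handling assumes the common rule that `*.corp` covers exactly one left-most label.

```python
def missing_sans(planned_fqdns, cert_sans):
    """Return the planned names that no DNS SAN entry covers."""

    def covered(name):
        name = name.lower()
        for san in cert_sans:
            san = san.lower()
            if san == name:
                return True
            # Assumed wildcard rule: "*.corp" covers "portal.corp"
            # but not "a.b.corp".
            if san.startswith("*.") and "." in name:
                if name.split(".", 1)[1] == san[2:]:
                    return True
        return False

    return [name for name in planned_fqdns if not covered(name)]


# Example FQDN plan taken from the checklist above.
PLANNED = ["ise-admin.corp", "portal.corp", "radius-a.corp", "radius-b.corp"]
```

For example, `missing_sans(PLANNED, ["portal.corp", "radius-a.corp", "radius-b.corp"])` returns `["ise-admin.corp"]`, which flags the Admin FQDN as missing from that certificate.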

Phase 1 — Build DC-A (Active Site)

1.1 Deploy PAN-A, MNT-A, PSN-A1/A2 (VMware/EVE-NG)

CLI (each node):

show version
show application status ise
show ntp
show clock detail
show dns

Expected: All services running, time/DNS OK.

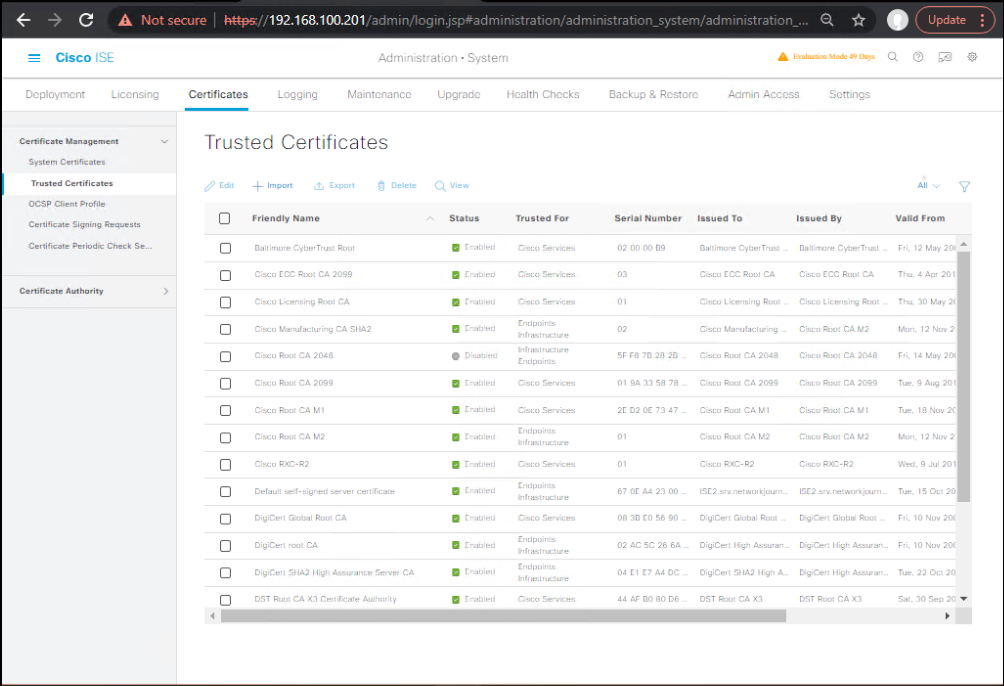

1.2 Establish Certificates & Trust

GUI: Administration → System → Certificates → Trusted Certificates

- Import root + intermediates (mark for EAP/Portal trust as required).

GUI: System Certificates

- Install Admin cert on PAN-A; EAP/Portal on PSN-A1/A2 (or terminate TLS at LB).

CLI Validation (from a host with OpenSSL, against the PSN VIP):

openssl s_client -connect <vip-a>:443 -servername portal.corp </dev/null 2>/dev/null | openssl x509 -noout -subject -issuer

Expected: Subject/CN/SAN correct; chain complete.
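
The same subject/issuer check can be scripted with Python's standard `ssl` module. This is a sketch under assumptions: `summarize_cert` and `fetch_cert` are hypothetical helper names, and `fetch_cert` relies on the local trust store, so a portal signed by a private CA will fail verification unless that root is loaded first (for example with `context.load_verify_locations`).

```python
import socket
import ssl


def summarize_cert(cert):
    """Reduce the dict from SSLSocket.getpeercert() to CN, issuer CN, DNS SANs."""

    def common_name(rdns):
        for rdn in rdns:
            for key, value in rdn:
                if key == "commonName":
                    return value
        return None

    return {
        "subject_cn": common_name(cert.get("subject", ())),
        "issuer_cn": common_name(cert.get("issuer", ())),
        "dns_sans": [v for k, v in cert.get("subjectAltName", ()) if k == "DNS"],
    }


def fetch_cert(host, port=443, server_name=None, timeout=5.0):
    """Connect with SNI and return the verified peer certificate as a dict."""
    # The default context verifies the chain and hostname; an incomplete
    # chain raises ssl.SSLCertVerificationError instead of returning.
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=server_name or host) as tls:
            return tls.getpeercert()
```

A typical call would be `summarize_cert(fetch_cert("<vip-a>", server_name="portal.corp"))`, with the VIP placeholder replaced by a reachable address.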

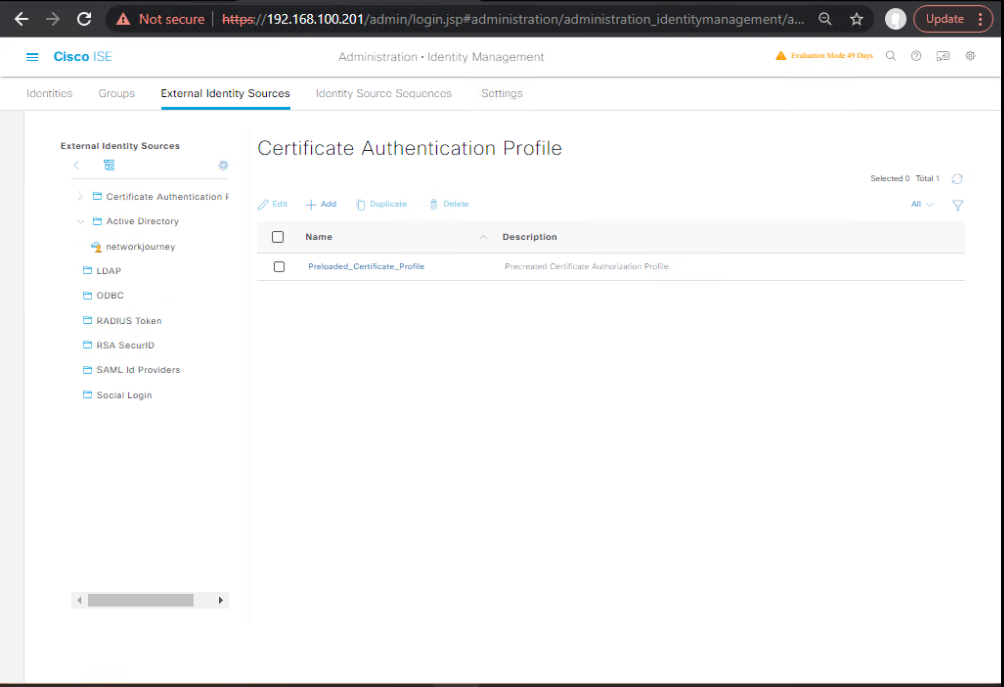

1.3 Join AD & Validate

GUI: Administration → Identity Management → External Identity Sources → Active Directory

- Join domain with service account; map to local AD site.

CLI Tail:

show logging application ise-identity.log tail

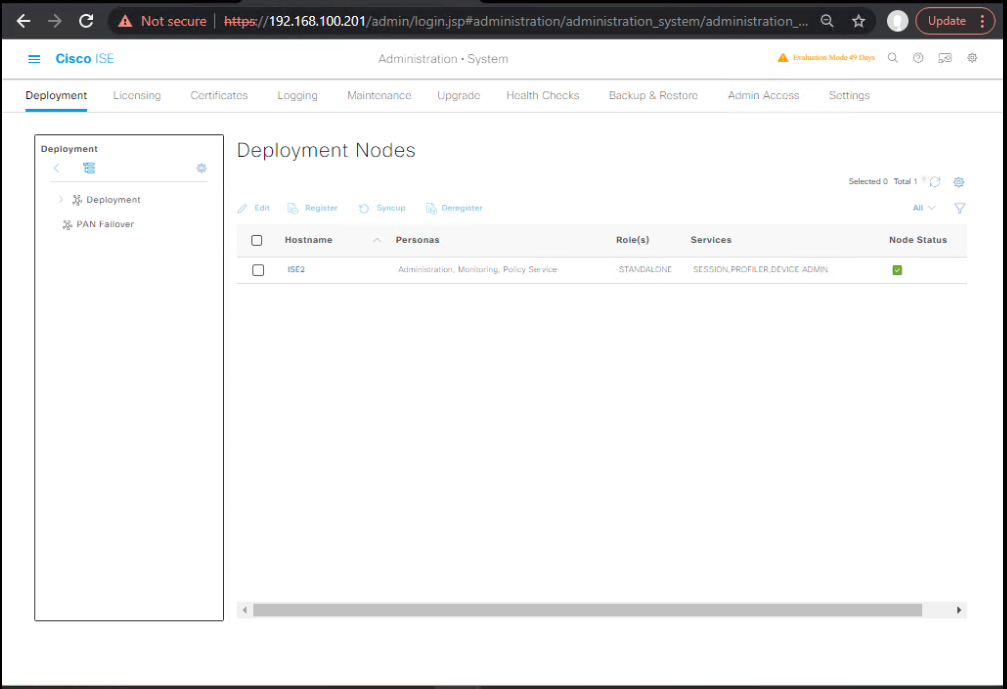

1.4 Register Personas (if installing as base)

GUI: Administration → System → Deployment

- Ensure PSN-A1/A2 are Policy Service; MNT-A Monitoring Primary.

Phase 2 — Add DC-B (Standby Site)

2.1 Deploy PAN-B, MNT-B, PSN-B1/B2

CLI:

show version
show ntp
show dns

2.2 Register PAN-B as Standby Admin

GUI (PAN-A): Administration → System → Deployment → Register Node

- Add PAN-B → Admin persona → Standby.

CLI (PAN-B):

show application status ise
show replication status

Expected: Replication: In Sync.

2.3 Register MNT-B as Secondary MnT

GUI (PAN-A): Register MNT-B → Monitoring Secondary.

GUI Validation (PAN-A): Deployment → Synchronization Status = Up to Date.

2.4 Register PSN-B1/B2

GUI (PAN-A): Register both as Policy Service.

Certificates: Install EAP/Portal certs (or trust chain if LB terminates TLS).

CLI (each PSN-B):

show application status ise
show logging application ise-psc.log tail

Phase 3 — Load Balancers & GSLB/DNS

3.1 Configure LB Pools/VIPs (per DC)

- VIP-A (DC-A): RADIUS 1812/1813, HTTPS 443 → pool(PSN-A1/A2)

- VIP-B (DC-B): RADIUS 1812/1813, HTTPS 443 → pool(PSN-B1/B2)

- Monitors: RADIUS (Access-Accept), HTTPS 200/portal login page

- Persistence: Source-IP for RADIUS; Cookie/SSLID for HTTPS
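
To make the source-IP persistence idea concrete, the sketch below maps a NAD's source address to a pool member and skips members that a monitor has marked down. It is an illustration only: real load balancers usually keep a persistence table rather than hashing on every packet, and `pick_member` is a hypothetical name, not an F5 or Citrix ADC function.

```python
import hashlib


def pick_member(source_ip, members, healthy):
    """Map a NAD source IP to one healthy pool member, deterministically."""
    candidates = [m for m in members if m in healthy]
    if not candidates:
        raise RuntimeError("no healthy pool members")
    # A stable hash keeps the same NAD on the same PSN while the pool
    # membership is unchanged.
    digest = hashlib.sha256(source_ip.encode()).digest()
    index = int.from_bytes(digest[:8], "big") % len(candidates)
    return candidates[index]


POOL_A = ["PSN-A1", "PSN-A2"]
```

With both members healthy, repeated calls for the same source IP return the same PSN; if only PSN-A2 is healthy, every source lands on PSN-A2, which matches the drain behaviour expected in the PSN failure test.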

F5 (sample)

tmsh show ltm pool
tmsh show ltm virtual

Citrix ADC (sample)

show lb vserver
show serviceGroup

3.2 GSLB/DNS

- portal.corp → geo → VIP-A or VIP-B

- radius.corp (optional) → geo → VIP-A or VIP-B

- Short TTL (30–60 s) for quick failover; health check both sites.

Validation

nslookup portal.corp
nslookup radius.corp

Expected: Returns closest healthy VIP per region.
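
The nslookup check can be turned into a repeatable test run from a host in each region. The following is a sketch, not a GSLB API: the VIP addresses are documentation-range placeholders, and `resolve_ipv4` and `classify_answer` are hypothetical helpers.

```python
import socket

# Placeholder VIPs (RFC 5737 documentation ranges); replace with real ones.
VIP_BY_SITE = {"DC-A": "192.0.2.10", "DC-B": "198.51.100.10"}


def resolve_ipv4(name):
    """Return the sorted set of IPv4 addresses the local resolver gives for name."""
    infos = socket.getaddrinfo(name, None, family=socket.AF_INET,
                               type=socket.SOCK_STREAM)
    return sorted({info[4][0] for info in infos})


def classify_answer(addresses, vip_by_site, expected_site):
    """Return (ok, sites_seen, unknown_addresses) for a DNS answer."""
    site_by_vip = {vip: site for site, vip in vip_by_site.items()}
    sites = sorted({site_by_vip[a] for a in addresses if a in site_by_vip})
    unknown = [a for a in addresses if a not in site_by_vip]
    ok = sites == [expected_site] and not unknown
    return ok, sites, unknown
```

From a Region-B test host, `classify_answer(resolve_ipv4("portal.corp"), VIP_BY_SITE, "DC-B")` should report `ok` as true while DC-B is healthy, and should switch to DC-A once the DC-B health check fails and the TTL expires.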

Phase 4 — NAD (Switch/WLC/VPN) Integration

4.1 Switch (Catalyst) – RADIUS + CoA

Config (example)

aaa new-model
radius server ISE-A
 address ipv4 <VIP-A> auth-port 1812 acct-port 1813
 key <SHARED_SECRET>
radius server ISE-B
 address ipv4 <VIP-B> auth-port 1812 acct-port 1813
 key <SHARED_SECRET>
aaa group server radius ISE-GRP
 server name ISE-A
 server name ISE-B
aaa authentication dot1x default group ISE-GRP
aaa authorization network default group ISE-GRP
aaa accounting dot1x default start-stop group ISE-GRP
ip device tracking
radius-server vsa send accounting
radius-server attribute 8 include-in-access-req
aaa server radius dynamic-author
 client <PSN-A-SUBNET> server-key <KEY>
 client <PSN-B-SUBNET> server-key <KEY>

Validation

test aaa group radius <user> <pass> legacy
show radius statistics

4.2 WLC 9800 – RADIUS + CWA Redirect

CLI

radius server ise-a
 address ipv4 <VIP-A> auth-port 1812 acct-port 1813
 key <KEY>
radius server ise-b
 address ipv4 <VIP-B> auth-port 1812 acct-port 1813
 key <KEY>
aaa authentication dot1x default group radius
aaa accounting dot1x default start-stop group radius

Validation

show radius summary

4.3 VPN (ASA/FTD)

aaa-server ISE-GRP protocol radius
aaa-server ISE-GRP host <VIP-A>
 key <KEY>
aaa-server ISE-GRP host <VIP-B>
 key <KEY>

Validation

test aaa-server authentication ISE-GRP host <VIP-A> username u password p

Phase 5 — Policy Sets & Location Steering

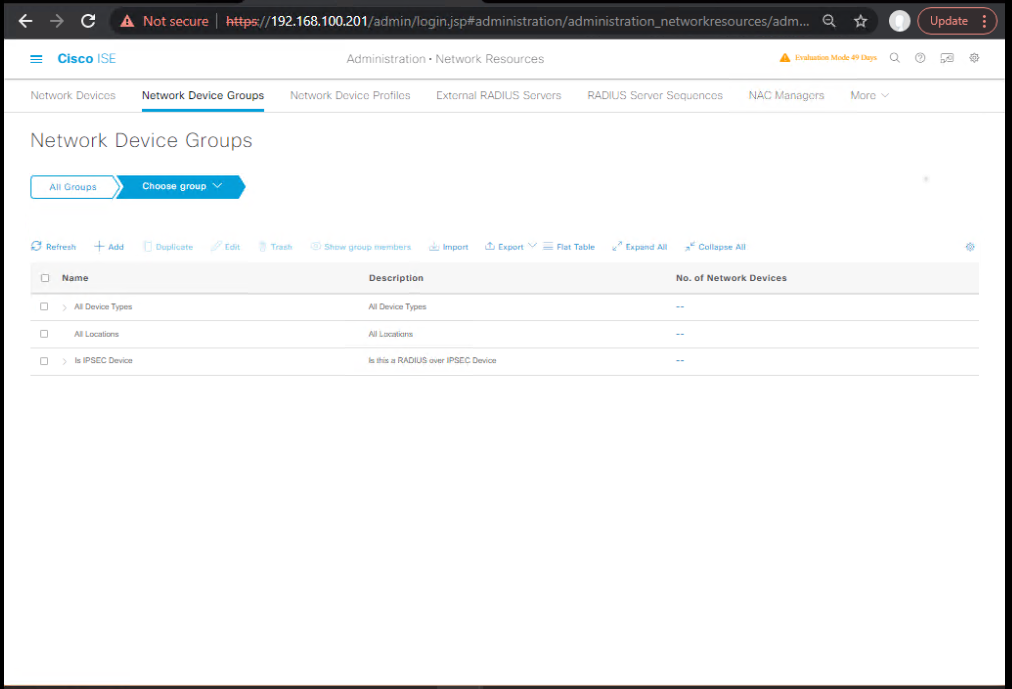

5.1 NDGs & Conditions

GUI: Administration → Network Resources → Network Device Groups

- Create Location = DC-A, DC-B; tag NADs accordingly.

5.2 Policy Sets

GUI: Policy → Policy Sets

- Create policy set per region/NAD group (e.g., Region-A-Policy, Region-B-Policy).

- Inside, keep common AuthC (EAP-TLS/PEAP) and site-specific AuthZ (DACL/SGT).

Validation: Simulate authentications from a NAD in each region; verify correct rule hit.

GUI: Operations → RADIUS → Live Logs (Result/Policy Set).
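
Policy sets are evaluated top-down and the first match wins, which is why the NDG Location condition steers each NAD to its regional set. The sketch below models only that selection step; `select_policy_set` and the attribute dictionary are simplifications invented for illustration and do not reflect how ISE stores policy.

```python
def select_policy_set(policy_sets, request):
    """Return the name of the first policy set whose conditions all match."""
    for name, conditions in policy_sets:
        if all(request.get(attr) == value for attr, value in conditions.items()):
            return name
    return "Default"  # fall through to the Default policy set


# Ordered list: position matters because evaluation stops at the first match.
POLICY_SETS = [
    ("Region-A-Policy", {"Location": "DC-A"}),
    ("Region-B-Policy", {"Location": "DC-B"}),
]
```

A request tagged `{"Location": "DC-B"}` selects `Region-B-Policy`; a NAD with no Location tag falls through to `Default`, which is a useful symptom to look for in Live Logs when an NDG assignment was missed.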

Phase 6 — Operational Validation (End-to-End)

6.1 Wired EAP-TLS (Region-A)

- Connect client → ensure local PSN-A served.

Switch CLI

show authentication sessions interface Gi1/0/10 details
show radius statistics

ISE GUI: Live Logs → PSN-A node; Access-Accept.

6.2 Wireless CWA (Region-B)

- Join SSID; redirect to https://portal.corp

Expect: GSLB → VIP-B; no cert warnings.

WLC CLI

show wireless client mac-address <mac> detail
show radius summary

ISE GUI: Live Logs → PSN-B; Guest success.

6.3 VPN AAA (Any Region)

ASA/FTD CLI

test aaa-server authentication ISE-GRP host <VIP-B> username u password p

ISE GUI: Accept with device = VPN NAD.

6.4 CoA & DACL

- Trigger policy to upgrade VLAN/DACL post-auth.

Switch CLI

show ip access-lists <dacl-name>
show authentication sessions interface Gi1/0/10

Expected: CoA worked (Reauth seen in logs).

6.5 Accounting Integrity

ISE GUI: Accounting → check Start/Interim/Stop continuity; MnT-A primary receiving; MnT-B in sync.
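
Accounting continuity can also be checked offline from exported records. This is a minimal sketch assuming each record has been reduced to a `(session_id, status)` pair in arrival order, with status values following the RADIUS Acct-Status-Type names; the export format and the `accounting_gaps` helper are assumptions.

```python
def accounting_gaps(records):
    """Return (problems, open_sessions) for (session_id, status) records.

    status is one of "Start", "Interim-Update", "Stop".
    """
    state = {}
    problems = []
    for session_id, status in records:
        previous = state.get(session_id)
        if status == "Start":
            if previous in ("Start", "Interim-Update"):
                problems.append((session_id, "duplicate Start"))
        elif previous is None or previous == "Stop":
            # Interim-Update or Stop arrived with no active session.
            problems.append((session_id, f"{status} without Start"))
        state[session_id] = status
    open_sessions = sorted(s for s, last in state.items() if last != "Stop")
    return problems, open_sessions
```

Sessions left open at the end of a drill are not necessarily errors, but a burst of "without Start" findings around a failover timestamp suggests accounting packets were lost while NADs switched VIPs.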

Phase 7 — Resilience Tests (Document Evidence)

7.1 PSN Failure (Region-A)

- Action: On PSN-A1, run:

application stop ise

- Expect: LB VIP-A drains to PSN-A2; authentications unaffected.

ISE GUI: Live Logs show node = PSN-A2.

F5/Citrix: pool member down.

7.2 DC-A Isolation (RADIUS only)

- Action: Blackhole VIP-A.

- Expect: NADs use VIP-B backup; GSLB steers portal.corp to DC-B.

NAD CLI:

show radius statistics

Retries to VIP-A fail; success on VIP-B.

7.3 Admin Failover

- Action: Stop PAN-A ISE app.

- Expect: PAN-B becomes Active (after manual promotion, or automatically if PAN auto-failover is configured); policies writable.

GUI: Deployment shows PAN-B Active.

CLI (PAN-B):

show application status ise
show replication status

7.4 MnT Continuity

- Action: Stop MNT-A; verify logs accessible on MNT-B.

GUI: Reports available; Live Logs continue.
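
Since this phase is about documenting evidence, it helps to record each drill in a uniform structure and compare the measured outage with the RTO target. The sketch below is one possible shape, not a prescribed format: the field names, the timestamp format, and `drill_result` are all assumptions.

```python
from datetime import datetime

TIME_FORMAT = "%Y-%m-%dT%H:%M:%S"  # assumed timestamp format for drill notes


def drill_result(name, fault_at, recovered_at, rto_target_s):
    """Build an evidence record with the measured outage in seconds."""
    started = datetime.strptime(fault_at, TIME_FORMAT)
    recovered = datetime.strptime(recovered_at, TIME_FORMAT)
    outage_s = (recovered - started).total_seconds()
    return {
        "drill": name,
        "fault_at": fault_at,
        "recovered_at": recovered_at,
        "outage_s": outage_s,
        "rto_target_s": rto_target_s,
        "passed": 0 <= outage_s <= rto_target_s,
    }
```

Each record can be serialized with `json.dumps` and stored next to the CLI output and screenshots captured during the same drill.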

Expert-Level Use Cases

- Global Active/Active PSN with GSLB Health-Steered RADIUS

- NADs use FQDN radius.corp; GSLB returns DC-local VIP; health probes remove unhealthy DC; ensures proximity without touching NAD configs.

- Dual-Terminated Portals (LB Offload + PSN TLS)

- LB terminates TLS for guest; PSN also holds portal cert for seamless fallback. Blue/Green cert rotation with SAN overlap enables instant cert swaps.

- Hybrid Cloud DR (Azure/AWS) for PSN-Only Burst

- Keep PAN/MnT on-prem; pre-baked PSN AMIs scale out in cloud during peak or outage; auto-register to PAN via API; attach to cloud LB.

- Per-Region DACL/SGT with Central Governance

- Common AuthC/posture globally; AuthZ injects regional DACL/SGT; NDG-based policy sets prevent cross-region drift.

- pxGrid Ecosystem Split-Brain Prevention

- pxGrid primary anchored to DC-A; consumers in both DCs trust the same CA; use client cert pinning and verify session directory convergence after failovers.

- Accounting Fan-Out + SIEM Offload

- MnT-A primary; syslog stream to SIEM from both MnTs. During DC fail, SIEM remains source of truth; reduces pressure on ops restore.

- Automated NAD RADIUS Flip with Guardrails

- Ansible job pushes NAD server-group re-order during DC outage; runs pre/post health (show radius statistics, auth tests) and auto-rollback on error budget breach.

- Per-Region CoA Assurance Matrix

- Nightly synthetic CoA from each PSN subnet to sampled NADs; store pass/fail in Grafana; alerts on path breaks (ACL/regression).

- Staged Maintenance Windows with Zero Impact

- Drain PSN pool on LB; upgrade PSNs per DC; keep the other DC live; test with synthetic auths; rotate DCs—no global outage.

- Portal CDN + Edge Caching

- For high-latency geographies, offload static portal assets (css/js) to CDN; keep dynamic flows on local PSNs; reduces TLS handshake time and portal TTFB.
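
As an illustration of the automated NAD RADIUS flip use case, the sketch below computes the reordered server list, renders the target server-group lines, and applies a simple error-budget test for rollback. It is written in Python rather than as an Ansible playbook, all function names and the 5% budget are assumptions, and it only renders the desired end state: on a live device, reordering usually means removing and re-adding group members, which is not handled here.

```python
def flip_for_outage(servers_in_order, failed):
    """Move the failed server to the end, keeping the order of the rest."""
    healthy = [s for s in servers_in_order if s != failed]
    demoted = [s for s in servers_in_order if s == failed]
    return healthy + demoted


def render_server_group(group, servers_in_order):
    """Render the target 'aaa group server radius' block as text."""
    lines = [f"aaa group server radius {group}"]
    lines += [f" server name {name}" for name in servers_in_order]
    return "\n".join(lines)


def should_rollback(auth_ok, auth_total, max_failure_ratio=0.05):
    """Roll back when post-change test failures exceed the error budget."""
    if auth_total == 0:
        return True  # no evidence that the change works
    return (auth_total - auth_ok) / auth_total > max_failure_ratio
```

For a DC-A outage, `flip_for_outage(["ISE-A", "ISE-B"], "ISE-A")` yields `["ISE-B", "ISE-A"]`, and `should_rollback` would be evaluated on the results of the synthetic auth tests run after the push.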

Quick Validation Commands

ISE

show application status ise
show replication status
show logging application ise-psc.log tail
show logging application ise-identity.log tail
show ntp
show dns
show clock detail

Catalyst

test aaa group ISE-GRP <user> <pass> legacy
show authentication sessions interface Gi1/0/10 details
show radius statistics

WLC 9800

show radius summary
show wireless client mac-address <mac> detail

F5

tmsh show ltm pool
tmsh show ltm virtual

Citrix ADC

show lb vserver
show serviceGroup

DNS

nslookup portal.corp
nslookup radius.corp

OpenSSL (portal chain)

openssl s_client -connect portal.corp:443 -servername portal.corp </dev/null | openssl x509 -noout -subject -issuer

FAQs – Multi-Data Center Deployments in Cisco ISE

Q1. How do I decide which ISE nodes to place in each data center?

Answer:

- PAN/MNT: Always deploy Primary PAN/MNT in DC1 and Secondary PAN/MNT in DC2.

- PSNs: Place PSNs close to users (e.g., one pair in each DC, plus regional hubs).

- Design tip: Never span PAN HA across more than two data centers—it increases replication latency.

- CLI Validation:

show application status ise
show replication status

- GUI Validation: Administration → System → Deployment (confirm each node's personas and roles).

Q2. How does replication between PAN/MNT nodes work in multi-site deployments?

Answer:

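Replication uses TCP 7800 and requires low latency (< 200 ms RTT); verify both values for your ISE version.

- Configuration replicates outward from the primary PAN to the secondary PAN and the other registered nodes.

- Unless automatic PAN failover is configured, you must manually promote the secondary PAN during a failover.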

- CLI Validation:

show replication status
show logging system ade/ADE.log | include replication

Q3. Do I need a load balancer (F5/Citrix ADC) for PSNs in multi-DC setups?

Answer:

Yes, strongly recommended:

- Ensures session stickiness for RADIUS/TACACS.

- Simplifies WLC/switch configurations (point to VIP instead of individual PSNs).

- Provides transparent failover if one PSN or DC goes down.

Q4. What’s the best practice for RADIUS timeouts in multi-DC?

Answer:

- Set the switch/WLC RADIUS timeout to 5–7 seconds. Defaults vary by platform (IOS/IOS-XE defaults to 5 s), so confirm the current value before changing it.

- Configure multiple RADIUS servers (local DC first, remote DC as backup).

- CLI Example on switch:

radius-server timeout 7
radius-server retransmit 3
aaa group server radius ISE-GROUP
 server 10.1.1.10
 server 10.2.1.10
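
The timeout and retransmit values above determine how long a NAD waits on an unresponsive local VIP before it tries the remote one. The arithmetic below is a rough sketch: it assumes one initial request plus `retransmit` retries per server and ignores dead-server detection (`radius-server dead-criteria` / `deadtime`), which can shorten the wait for later sessions. The function name is hypothetical.

```python
def worst_case_failover_s(timeout_s, retransmit, dead_servers=1):
    """Seconds spent on unresponsive servers before the next one is tried."""
    attempts_per_server = 1 + retransmit  # initial request plus retries
    return timeout_s * attempts_per_server * dead_servers
```

With `timeout 7` and `retransmit 3`, `worst_case_failover_s(7, 3)` gives 28 seconds for the first session that hits a dead server, so it is worth checking that supplicant and portal timers tolerate a delay of that order.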

Q5. How do I test failover between data centers?

Answer:

- Shut down Primary PAN in DC1.

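- Promote Secondary PAN in DC2 (via GUI or CLI).

application configure ise

- Validate policy push and authentication still work.

- Shut down one PSN in DC1 and verify authentication requests are routed to DC2 PSN.

- GUI Validation: Administration → System → Deployment shows the DC2 PAN as active; Operations → RADIUS → Live Logs shows a DC2 PSN serving requests.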

Q6. Can PAN/MNT be active/active across DCs?

Answer:

No. PAN/MNT is active/passive only.

- Only one PAN is “active” at a time.

- MNT also runs as a primary/secondary pair: the PAN reads from the primary MNT, while ISE nodes send logs to both MNT nodes.

- Workaround: Use syslog forwarding from each ISE node to a central SIEM (Splunk, QRadar).

Q7. What are the latency and bandwidth requirements for multi-DC ISE?

Answer:

- Latency: < 200 ms RTT for PAN ↔ PSN and PAN ↔ PAN.

- Bandwidth: At least 10 Mbps dedicated for replication traffic in large environments.

- Use ping and iperf between DC nodes to validate.
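
A ping summary can be checked against the figures quoted in this module (under 200 ms RTT, under 1% loss) with a few lines of parsing. This sketch assumes the summary format printed by common Linux and macOS `ping` implementations; `parse_ping` and `within_guardrails` are hypothetical helpers, and the thresholds should be replaced with the values published for your ISE version.

```python
import re


def parse_ping(output):
    """Extract (avg_rtt_ms, loss_pct) from a ping summary."""
    loss = re.search(r"([\d.]+)% packet loss", output)
    # Matches "min/avg/max/... = 41.2/42.7/45.1/1.1 ms" and takes the avg.
    rtt = re.search(r"= [\d.]+/([\d.]+)/", output)
    if not loss or not rtt:
        raise ValueError("unrecognized ping output")
    return float(rtt.group(1)), float(loss.group(1))


def within_guardrails(avg_rtt_ms, loss_pct, max_rtt_ms=200.0, max_loss_pct=1.0):
    """True when both latency and loss are below the stated limits."""
    return avg_rtt_ms < max_rtt_ms and loss_pct < max_loss_pct
```

Feeding the captured output of a ping between PAN-A and PAN-B into `parse_ping` and then `within_guardrails` gives a pass/fail value that can be stored with the rest of the drill evidence.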

Q8. How do I ensure session persistence if one DC fails?

Answer:

- Use F5/Citrix ADC with session stickiness.

- Configure same Node Group IDs for PSNs across DCs (for CoA messages).

- Enable RADIUS persistence at the load balancer level.

Q9. How do I backup and restore ISE in a multi-DC setup?

Answer:

- Backup only from the Primary PAN.

- Store backups in remote repository (FTP/SFTP).

- Restore must be done in the same version, and PAN role assignment must be reconfigured.

- CLI Example:

backup ise-DC1-repo repository SFTP-Repo ise-config encryption-key plain <KEY>
restore <backup-file-name> repository SFTP-Repo encryption-key plain <KEY>

Q10. What are common mistakes engineers make in multi-DC ISE deployments?

Answer:

- Not adjusting RADIUS timeouts → causes false authentication failures.

- Placing PSNs only in one DC → users in other DCs face high latency.

- Forgetting to configure secondary PAN → no HA in case of DC failure.

- Misconfigured load balancer (no RADIUS persistence).

- Skipping failover drills → deployment breaks in real incidents.

YouTube Link

For more in-depth Cisco ISE Mastery Training, subscribe to my YouTube channel Network Journey and join my instructor-led classes for hands-on, real-world ISE experience

Closing Notes

- Treat multi-DC as a traffic-engineering problem (RADIUS + HTTPS + CoA), not just “more nodes.”

- Localize PSNs, globalize admin/logging, and harmonize PKI + DNS/GSLB.

- Validate with scripted failovers and retain an evidence pack for audits.

Upgrade Your Skills – Start Today

Fast-Track to Cisco ISE Mastery Pro (4-Month Live Program)

You’ll master: Multi-DC design/validation, HA/DR, advanced policy sets, Guest/BYOD at scale, pxGrid/SGT, load-balancing/GSLB, upgrades/migrations, TAC-style troubleshooting, automation runbooks.

Course outline & enrollment: https://course.networkjourney.com/ccie-security/

Next steps: Book a discovery call, get the Multi-DC Runbook Pack (templates, scripts), and secure your seat.

Enroll Now & Future‑Proof Your Career

Email: info@networkjourney.com

WhatsApp / Call: +91 97395 21088
