[Day #50 PyATS Series] Automated Configuration Backup (Multi-Vendor) Using pyATS for Cisco [Python for Network Engineer]

Introduction — key points

Configuration backups are among the most important preventive controls in network operations: you need them when a device fails, when a change breaks production, and when auditors ask for history. Manual backups are fragile and slow. This masterclass shows you how to automate backups across Cisco and other vendors (Arista, Juniper, Fortinet) using pyATS, turning raw CLI output into structured, versioned artifacts.

What you’ll build in this Article (practical outcomes):

- A repeatable pyATS job that collects running / startup configs from many devices.

- Storage strategy: timestamped files, Git repository for versioning, optional encrypted archive, and object storage (S3) integration.

- Validation: CLI verification that configs were retrieved, and GUI validation via a simple web dashboard (Elasticsearch/Kibana or a minimal Flask page).

- Change detection: diffing new configs vs last backup, generating drift reports, and escalating alerts when unexpected changes occur.

- Operational best practices: retention, rotation, credential handling, concurrency, and failover.

This is a hands-on masterclass Article — we go beyond theory into scripts you can run, CLI outputs to expect, and GUI validation flows.

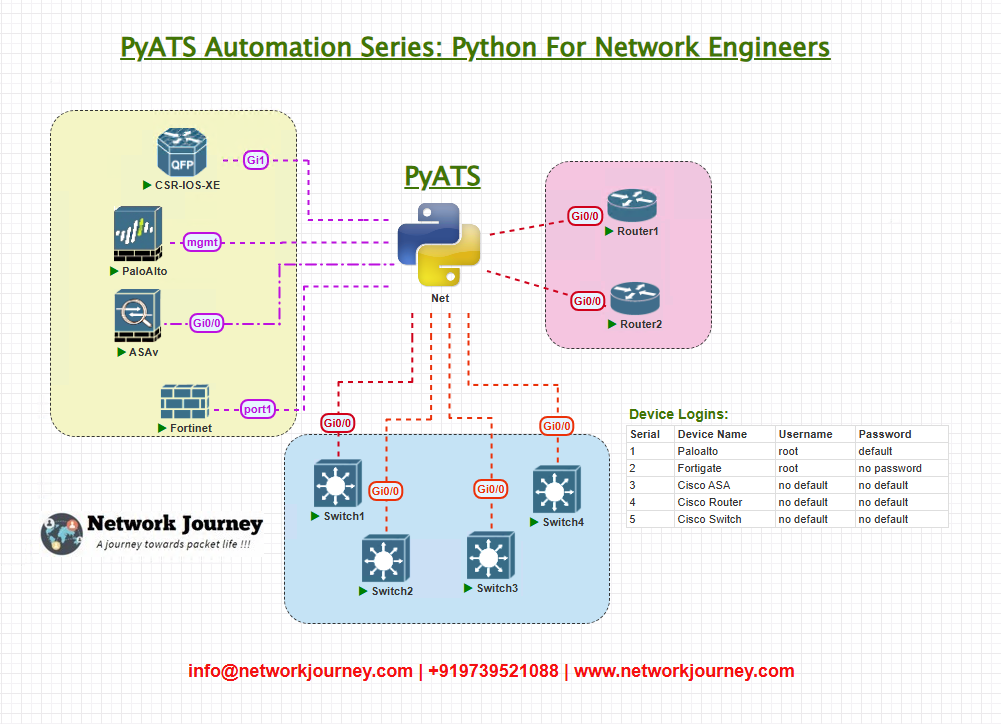

Topology Overview

You need a small but realistic automation lab to test this:

- Management VLAN reachable from AutomationHost.

- Syslog/Elasticsearch optional for GUI validation.

- Git server or local bare repo for version control of backups.

Topology & Communications

What we will communicate with devices:

- SSH sessions initiated by pyATS (connections are handled by Unicon, the pyATS connection library).

- Commands collected (vendor-specific where required):

  - Cisco IOS-XE: `show running-config`, `show startup-config` (if available)

  - Cisco NX-OS: `show running-config`

  - Arista EOS: `show running-config`

  - Junos: `show configuration | display set` (or raw `show configuration`)

  - FortiGate: `show full-configuration` or `show config system global` (vendor specifics)

- Collect metadata: hostname, version, serial, timestamp for naming.
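The per-vendor command list above can be captured as a small lookup table keyed on the testbed `os` value. This is a minimal sketch, not part of pyATS: the `CONFIG_COMMANDS` table and the `commands_for` helper are illustrative names, and the commands are simply the ones listed above.

```python
# Hypothetical lookup table: testbed `os` value -> config collection commands.
# The commands come from the list above; adjust them for your platforms.
CONFIG_COMMANDS = {
    "iosxe": ["show running-config", "show startup-config"],
    "nxos": ["show running-config"],
    "eos": ["show running-config"],
    "junos": ["show configuration | display set"],
    "fortios": ["show full-configuration"],
}


def commands_for(os_name):
    """Return the commands to run for a device OS, or raise if it is unknown."""
    try:
        return list(CONFIG_COMMANDS[os_name.lower()])
    except KeyError:
        raise ValueError(f"No backup command defined for os={os_name!r}") from None


if __name__ == "__main__":
    for os_name in ("iosxe", "junos"):
        print(os_name, "->", commands_for(os_name))
```

Failing loudly on an unknown OS is a deliberate choice here: silently falling back to `show running-config` would produce an error message saved as a "backup" on platforms that do not support it.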

Storage & flow:

- pyATS collects config → saves to `backups/<device>/<YYYYMMDD_HHMMSS>_running.cfg`

- Run normalization (strip timestamps, mask secrets) → `normalized/<device>/<hash>.cfg`

- Diff normalized config vs latest in Git → if changed, commit to Git with message

- Push commit to remote Git (optional) and archive to S3 (optional)

- Store event into Elasticsearch for GUI dashboards (optional)

Validation flows:

- CLI: verify file exists, file checksum matches output, `show archive` or remote `ls`.

- GUI: Kibana dashboard or simple Flask app lists last successful backup per device, change status (OK/CHANGED), last diff summary.
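The CLI check described above (the file exists and its checksum matches what was collected) could be scripted roughly as follows. This is only a sketch: `verify_backup` is a hypothetical helper, and SHA-256 is an arbitrary choice of checksum.

```python
import hashlib
from pathlib import Path


def verify_backup(path, collected_text):
    """Return True if `path` exists and its content hashes to the same
    SHA-256 digest as the text collected from the device."""
    path = Path(path)
    if not path.is_file():
        return False
    on_disk = hashlib.sha256(path.read_bytes()).hexdigest()
    expected = hashlib.sha256(collected_text.encode("utf-8")).hexdigest()
    return on_disk == expected
```

The comparison assumes the file was written as UTF-8 without newline translation; if the writer changes line endings, the digests will differ even though the configuration is the same.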

Security notes:

- Never store plain-text credentials in the repository.

- Use vaults or environment variables; consider the `pyats` credential store or a system secrets manager.

- Mask sensitive lines (passwords, community strings) during normalization.

Workflow Script — full pyATS backup & versioning job

Below is a self-contained script that collects configurations and commits changes to a Git repo. Treat it as a starting point to harden rather than a finished product. Save as pyats_backup.py.

Pre-requisites: pyATS and Genie installed (`pip install "pyats[full]"`), the `git` CLI available, a local Git working copy for the backups (`net-backups`, created with `git init`, optionally with a bare remote such as `/srv/net-backups.git`), and proper SSH keys or credentials to push.

#!/usr/bin/env python3
"""
pyats_backup.py

Collect running-config from devices in testbed.yml, normalize, diff with last commit,
commit changes to local git repo, and optionally push to remote / S3.
"""
import hashlib
import json
import os
import re
import subprocess
from datetime import datetime
from pathlib import Path

from genie.testbed import load

# CONFIG
TESTBED = 'testbed.yml'
BACKUP_BASE = Path('backups')
NORMALIZED_BASE = Path('normalized')
GIT_REPO = Path('net-backups')  # local git working copy (non-bare)

# Each pattern keeps the keyword (group 1) and redacts the optional
# encryption-type digit together with the secret that follows it.
# [ \t]+ is used instead of \s+ so a match never runs onto the next line.
MASK_PATTERNS = [
    r'(\b(?:password|secret)[ \t]+)(?:\d+[ \t]+)?\S+',
    r'(\bcommunity[ \t]+)\S+',
]

GIT_COMMIT_NAME = "automation"
GIT_COMMIT_EMAIL = "automation@example.com"
S3_UPLOAD = False
S3_BUCKET = "s3://my-net-backups"  # optional


def ensure_dirs(device):
    """Create (if needed) and return the raw and normalized dirs for a device."""
    d_backup = BACKUP_BASE / device
    d_norm = NORMALIZED_BASE / device
    d_backup.mkdir(parents=True, exist_ok=True)
    d_norm.mkdir(parents=True, exist_ok=True)
    return d_backup, d_norm


def mask_secrets(text):
    """Replace secret values matched by MASK_PATTERNS with <redacted>."""
    for p in MASK_PATTERNS:
        text = re.sub(p, r'\1<redacted>', text, flags=re.IGNORECASE)
    return text


def normalize_config(raw_cfg):
    """
    Normalize configuration:
    - remove timestamps
    - mask secrets
    - remove lines that change often (like counters)
    """
    lines = []
    for line in raw_cfg.splitlines():
        # skip comment lines that carry generation timestamps or uptime
        if line.strip().startswith('!') and ('Generated' in line or 'uptime' in line):
            continue
        lines.append(line)
    cfg = "\n".join(lines)
    cfg = mask_secrets(cfg)
    # optionally canonicalize ordering for certain sections (not implemented fully here)
    return cfg


def fingerprint(text):
    """SHA-1 of the normalized text; used only for change detection."""
    return hashlib.sha1(text.encode('utf-8')).hexdigest()


def git_commit(device, file_path, message):
    """Stage one file and commit it as the automation user."""
    cwd = GIT_REPO
    rel = os.path.relpath(file_path, cwd)
    subprocess.run(['git', 'add', rel], cwd=cwd, check=True)
    env = os.environ.copy()
    env['GIT_COMMITTER_NAME'] = GIT_COMMIT_NAME
    env['GIT_COMMITTER_EMAIL'] = GIT_COMMIT_EMAIL
    subprocess.run(['git', '-c', f'user.name={GIT_COMMIT_NAME}',
                    '-c', f'user.email={GIT_COMMIT_EMAIL}',
                    'commit', '-m', message], cwd=cwd, check=True, env=env)


def push_to_s3(file_path):
    """Copy a file to S3 with the AWS CLI when S3_UPLOAD is enabled."""
    if not S3_UPLOAD:
        return
    subprocess.run(['aws', 's3', 'cp', str(file_path), S3_BUCKET], check=True)


def main():
    tb = load(TESTBED)
    devices = tb.devices.values()
    summary = {}

    for dev in devices:
        name = dev.name
        print(f"[{datetime.utcnow().isoformat()}] Backing up {name}")
        d_b, d_n = ensure_dirs(name)
        ts = datetime.utcnow().strftime('%Y%m%d_%H%M%S')
        rawfile = d_b / f"{ts}_running.cfg"

        try:
            dev.connect(log_stdout=False)
            dev.execute('terminal length 0')
            # device-specific show running-config
            # Genie parse could be used but we want raw text
            raw_cfg = dev.execute('show running-config')  # vendor-specific adjustments may be needed
            dev.disconnect()
        except Exception as e:
            print("Failed to connect or collect:", e)
            summary[name] = {'status': 'ERROR', 'error': str(e)}
            continue

        # Raw copy is written unmasked (audit trail); keep this directory out of Git
        with open(rawfile, 'w') as f:
            f.write(raw_cfg)

        # Normalize & mask
        norm = normalize_config(raw_cfg)
        fingerprint_new = fingerprint(norm)
        normfile = d_n / f"{fingerprint_new}.cfg"

        if normfile.exists():
            # An existing file means this exact config has been seen before
            print(f"No change for {name} (fingerprint {fingerprint_new})")
            summary[name] = {'status': 'UNCHANGED', 'fingerprint': fingerprint_new}
            # optionally still copy for timestamped archive
        else:
            with open(normfile, 'w') as f:
                f.write(norm)
            # copy normfile into git repo path e.g., net-backups/<device>/<fingerprint>.cfg
            dest_dir = GIT_REPO / name
            dest_dir.mkdir(parents=True, exist_ok=True)
            dest_path = dest_dir / f"{fingerprint_new}.cfg"
            with open(dest_path, 'w') as f:
                f.write(norm)
            # commit to git
            commit_msg = f"Backup {name} at {ts}, fingerprint {fingerprint_new}"
            try:
                git_commit(name, dest_path, commit_msg)
                print(f"Committed new backup for {name}")
                push_to_s3(dest_path)
                summary[name] = {'status': 'CHANGED', 'fingerprint': fingerprint_new}
            except subprocess.CalledProcessError as e:
                print("Git commit failed:", e)
                summary[name] = {'status': 'GIT_FAILED', 'error': str(e)}

    # Save run summary
    with open('backup_summary.json', 'w') as f:
        json.dump({'ts': datetime.utcnow().isoformat(), 'summary': summary}, f, indent=2)


if __name__ == '__main__':
    main()

Notes & extensions you should implement:

- Use vendor-specific commands where `show running-config` is different (Junos needs `show configuration | display set`).

- For NX-OS, `show running-config` may need to be `show run all` in some contexts.

- For large fleets, collect in parallel: pyATS ships `pcall` in `pyats.async_`, or you can factor the per-device work out of `main()` into a function (for example `collect_device`) and run it with `concurrent.futures`.

- Use `pygit2` or the raw `git` CLI as shown; ensure the `net-backups` repo is initialized and has a `.gitignore` for secrets.

Explanation by Line

I’ll break key parts into actionable, explain-why pieces.

Directory & repo design

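- `BACKUP_BASE` stores raw timestamped retrievals (audit trail). These files are written before masking, so restrict access to this directory and keep it out of Git.

- `NORMALIZED_BASE` stores canonical configs (used for diffs).

- `GIT_REPO` is a working tree with device subdirectories; commit only normalized files to track semantic changes.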

mask_secrets() and normalize_config()

- Mask sensitive lines early to avoid secret leakage into the repo. Regex patterns will vary by vendor syntax, so expand them to handle `secret`, `enable secret`, `username x password 0 foo`, `set system login...` in Junos, etc.

- Normalization removes noise: remove timestamped banners, counters, ephemeral lines (like session counts), and order sections predictably where feasible.

Fingerprint (hash)

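- A SHA-1 hash of the normalized content determines whether the backup is new, which prevents noisy commits and keeps history clean. Note that the script treats any existing `<fingerprint>.cfg` as unchanged, so a device that reverts to an earlier configuration is reported as UNCHANGED; compare against the most recent fingerprint if you need to catch reverts.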

Git commit flow

- Copy normalized file into `GIT_REPO/<device>/<fingerprint>.cfg` and commit with clear message. Optionally, create a human-readable symlink `latest.cfg` pointing to current fingerprint.
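One way to maintain the optional `latest.cfg` symlink, and to use it to compare a new fingerprint against the most recent one rather than against any file ever written, is sketched below. The helper names are hypothetical, and the sketch assumes a POSIX filesystem where symlinks and atomic renames are available.

```python
import os
from pathlib import Path


def latest_fingerprint(device_dir):
    """Return the fingerprint that latest.cfg points to, or None if unset."""
    link = Path(device_dir) / "latest.cfg"
    if not link.is_symlink():
        return None
    return Path(os.readlink(link)).stem


def update_latest(device_dir, fingerprint):
    """Point latest.cfg at <fingerprint>.cfg, replacing any previous link."""
    device_dir = Path(device_dir)
    tmp = device_dir / "latest.cfg.tmp"
    if tmp.is_symlink() or tmp.exists():
        tmp.unlink()
    # A relative target keeps the link valid if the repo is moved or cloned.
    os.symlink(f"{fingerprint}.cfg", tmp)
    os.replace(tmp, device_dir / "latest.cfg")  # rename swaps the link itself


def has_changed(device_dir, fingerprint):
    """True when the new fingerprint differs from the most recent one."""
    return latest_fingerprint(device_dir) != fingerprint
```

With this check, a device that goes from configuration A to B and back to A is reported as changed twice, because each run is compared with the previous state only.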

S3/remote push

- Offsite backup recommended: this script shows a `push_to_s3()` placeholder using the AWS CLI. In production prefer the SDK (boto3) with a proper IAM role rather than a shell `aws` call.
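A boto3-based replacement for the placeholder might look like the sketch below. The function name `upload_backup`, the `<device>/<filename>` key layout, and the choice of `AES256` server-side encryption are assumptions for illustration; credentials are expected to come from the environment or an IAM role, and the bucket is passed as a plain name rather than an `s3://` URL.

```python
from pathlib import Path


def upload_backup(file_path, bucket, device, client=None):
    """Upload one backup file to <bucket>/<device>/<filename> with SSE enabled."""
    if client is None:
        import boto3  # imported lazily so the module loads without boto3
        client = boto3.client("s3")
    file_path = Path(file_path)
    key = f"{device}/{file_path.name}"
    client.upload_file(
        str(file_path),
        bucket,
        key,
        ExtraArgs={"ServerSideEncryption": "AES256"},
    )
    return key
```

Accepting the client as a parameter keeps the function easy to exercise with a stub in tests, and lets a long-running job reuse one client for the whole fleet.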

Error handling & summary

- Record per-device status into `backup_summary.json` so GUI (or alerting) can show success/failure.

testbed.yml Example

Use vendor os fields so Genie uses correct parsers (if used):

testbed:
  name: backup_testbed
  credentials:
    default:
      username: admin
      password: Cisco123!

devices:
  R1:
    os: iosxe
    type: router
    connections:
      cli:
        protocol: ssh
        ip: 10.0.10.11
  SW1:
    os: nxos
    type: switch
    connections:
      cli:
        protocol: ssh
        ip: 10.0.10.21
  AR1:
    os: eos
    type: switch
    connections:
      cli:
        protocol: ssh
        ip: 10.0.10.31
  JUN1:
    os: junos
    type: router
    connections:
      cli:
        protocol: ssh
        ip: 10.0.10.41
  FGT1:
    os: fortios
    type: firewall
    connections:
      cli:
        protocol: ssh
        ip: 10.0.10.51

Security: don’t store production credentials in the file. Use environment vars or a secrets manager.
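As a rough illustration of the environment-variable approach, the helper below reads credentials at run time and fails fast when one is missing. The function name and the `PYATS_USERNAME` / `PYATS_PASSWORD` variable names are assumptions for this sketch, not a pyATS convention.

```python
import os


def credentials_from_env(prefix="PYATS"):
    """Read <PREFIX>_USERNAME and <PREFIX>_PASSWORD from the environment.

    The variable names are an assumption for this sketch; use whatever
    naming your scheduler or secrets manager injects.
    """
    creds = {}
    for field in ("username", "password"):
        var = f"{prefix}_{field.upper()}"
        value = os.environ.get(var)
        if not value:
            raise RuntimeError(f"Missing required environment variable {var}")
        creds[field] = value
    return creds
```

pyATS testbed files can also reference environment variables directly with the `%ENV{VAR_NAME}` markup, which keeps the password out of the YAML without any extra Python.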

Post-validation CLI (Real expected output)

These show expected result of backup runs, file listings, Git commits, and diffs.

A. show running-config snippet (Cisco IOS-XE)

R1# show running-config
Building configuration...
!
hostname R1
!
username netops privilege 15 secret 5 $1$abc$abcdefg...
!
interface GigabitEthernet0/0
 ip address 10.0.0.1 255.255.255.0
 no shutdown
!
router ospf 1
 network 10.0.0.0 0.0.0.255 area 0
!
end

B. Local backups folder layout after run

$ tree backups -L 2
backups/
└── R1
    ├── 20250828_120102_running.cfg
    └── 20250829_080045_running.cfg

C. Normalized files stored in git repo

$ ls net-backups/R1
b4d6f7a29c1bf8e4f2b6d0c1.cfg
latest -> b4d6f7a29c1bf8e4f2b6d0c1.cfg

D. Git commit summary (after commit)

$ git -C net-backups log --oneline -n 3
a3f2c14 Backup R1 at 20250829_080045, fingerprint b4d6f7a...
d9c7b11 Backup SW1 at 20250829_080030, fingerprint e9a7c...
c7b1a4d Initial commit of backup repo

E. Diff output showing config drift

$ git -C net-backups diff HEAD~1 HEAD -- R1/b4d6f7a29c1bf8e4f2b6d0c1.cfg
diff --git a/R1/old.cfg b/R1/new.cfg
@@ -12,7 +12,7 @@
 interface GigabitEthernet0/0
  ip address 10.0.0.1 255.255.255.0
  no shutdown
 !
-router ospf 1
+router ospf 2
  network 10.0.0.0 0.0.0.255 area 0

F. Backup summary JSON example

{
  "ts": "2025-08-29T08:00:45.123456",
  "summary": {
    "R1": {"status": "CHANGED", "fingerprint": "b4d6f7a29c1bf8e4f2b6d0c1"},
    "SW1": {"status": "UNCHANGED", "fingerprint": "e9a7c..."},
    "AR1": {"status": "ERROR", "error": "SSH authentication failed"}
  }
}

Appendix — Extra practical tips & automation add-ons

A. Cron + Logging

Use systemd timer or cron to run the script and write logs:

0 * * * * /usr/bin/python3 /opt/pyats/pyats_backup.py >> /var/log/pyats_backup.log 2>&1

B. Slack Alert Example (on change)

Add to script:

import os
import requests

def slack_alert(msg):
    # Post only when a webhook URL is configured in the environment
    webhook = os.getenv('SLACK_WEBHOOK')
    if webhook:
        requests.post(webhook, json={'text': msg}, timeout=5)

Call when summary[name]['status']=='CHANGED' to notify the team.

C. Robust uptime/fingerprinting

For better flap detection, store history per-device: maintain last N fingerprints and timestamps, compute frequency of changes and alert if a device changes more than X times in Y hours.
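The flap-detection idea can be sketched with a per-device list of `(timestamp, fingerprint)` pairs. The function names, the in-memory `history` dictionary, and the default thresholds (more than 3 changes in 6 hours, last 20 entries kept) are illustrative assumptions; a real job would persist the history between runs.

```python
from datetime import datetime, timedelta


def record_change(history, device, fingerprint, when, keep=20):
    """Append (timestamp, fingerprint) for a device, keeping the last `keep` entries."""
    entries = history.setdefault(device, [])
    entries.append((when, fingerprint))
    del entries[:-keep]  # drop everything older than the last `keep` entries
    return entries


def is_flapping(history, device, now, max_changes=3, window=timedelta(hours=6)):
    """True if the device changed more than `max_changes` times within `window`."""
    recent = [ts for ts, _ in history.get(device, []) if now - ts <= window]
    return len(recent) > max_changes


if __name__ == "__main__":
    history = {}
    start = datetime(2025, 8, 29, 8, 0, 0)
    for i in range(4):
        record_change(history, "R1", f"fp{i}", start + timedelta(minutes=10 * i))
    print(is_flapping(history, "R1", start + timedelta(hours=1)))
```

Call `record_change` only when a run reports CHANGED, so the count reflects real configuration changes rather than the number of backup runs.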

D. Restore playbook (safety)

Never auto-restore. Create a guided restore playbook that:

- Opens a PR or ticket with proposed changes.

- Runs config sanity tests (lint, syntax check).

- Applies change during controlled window using Ansible/pyATS `configure` sequences with `commit confirmed` if supported.

FAQs

1. How do I handle vendor differences in commands and formatting?

Answer: For each vendor, use the vendor-specific command set and normalization. Example:

- Junos: `show configuration | display set` (or `show configuration` then parse)

- Arista EOS: `show running-config`

- FortiGate: `show full-configuration` (may require API)

Wrap collection in device-specific functions and normalize output into comparable canonical forms before fingerprinting.

2. How can I avoid committing secrets into Git?

Answer: Mask secrets before writing to the Git working tree. Implement aggressive regexes to identify secret, password, community, psk, key patterns and replace with <redacted>. Keep normalization deterministic so redaction doesn’t introduce noise. Use secret scanning tools (truffleHog) in CI to verify no secrets slip by.

3. How do I detect real changes vs meaningless differences (timestamps, counters)?

Answer: Normalization step must remove or canonicalize:

- Banners with generation time or uptime

- Interface counters and session counts

- Dynamic ACL counters

Also use structured parsing (Genie) where possible to only consider semantic config blocks.
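A text-only version of this idea, stripping volatile lines before diffing, can be sketched with the standard library. The `VOLATILE` patterns are assumed examples of lines that change on every collection, not an exhaustive or vendor-verified list, and the helper names are hypothetical.

```python
import difflib
import re

# Assumed examples of volatile lines; extend this list per platform.
VOLATILE = [
    re.compile(r"^!\s*Last configuration change at "),
    re.compile(r"^!\s*NVRAM config last updated at "),
    re.compile(r"^!\s*Time: "),
    re.compile(r"^Building configuration"),
    re.compile(r"^Current configuration : \d+ bytes"),
]


def strip_volatile(text):
    """Drop lines that change on every collection and trim trailing whitespace."""
    kept = []
    for line in text.splitlines():
        if any(p.search(line) for p in VOLATILE):
            continue
        kept.append(line.rstrip())
    return kept


def drift_report(old_text, new_text, device="device"):
    """Return a unified diff (list of lines) between two configs after cleanup."""
    return list(difflib.unified_diff(
        strip_volatile(old_text),
        strip_volatile(new_text),
        fromfile=f"{device}/previous",
        tofile=f"{device}/current",
        lineterm="",
    ))
```

An empty list means the two collections differ only in noise; anything else is a candidate drift report to attach to an alert or ticket.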

4. How do I securely store and rotate backups?

Answer: Key points:

- Store a copy in an offsite object store (S3) with server-side encryption (SSE).

- Limit Git retention via policies, or snapshot commits into a long-term archive (encrypted tar with GPG).

- Enforce RBAC on the backup repository — only automation user should push commits, and only a few humans can force-push or delete.

5. How can I integrate backups into CI/CD and change control?

Answer: Use your Git repo as the single source of truth. For config pushes:

- Create a PR containing proposed config changes (diff shows exactly what will change).

- Run automated checks (lint, compliance) against the proposed config.

- On merge, automation can push the config via Ansible/pyATS and then verify by backing up post-change config and comparing.

6. What frequency should backups run?

Answer: Depends on change windows:

- High change environments: every 5–15 minutes or rely on syslog-driven backups.

- Stable infra: daily or hourly backups suffice.

A hybrid approach: scheduled nightly full backups + event-driven backup on config change (hooks from NetOps ticketing or CI/CD).

7. Can I validate backups via GUI as well as CLI?

Answer: Yes. Two options:

- Simple Flask UI that reads `backup_summary.json` and displays last backup per device + diff links.

- Full ELK pipeline: ingest `backup_summary.json` and per-change documents into Elasticsearch and build Kibana dashboards with filters (device, status, last changed).

GUI is crucial for SOC/ops teams to quickly see failing devices and trending diffs.
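Whichever front end you choose, the data preparation is the same: load the summary and put failing devices at the top. The sketch below is framework-neutral; `summary_rows` and the `STATUS_ORDER` ranking are illustrative names, and the input is the text of a `backup_summary.json` in the shape shown earlier.

```python
import json

# Failures first, then changes, then unchanged devices.
STATUS_ORDER = {"ERROR": 0, "GIT_FAILED": 1, "CHANGED": 2, "UNCHANGED": 3}


def summary_rows(summary_json):
    """Turn backup_summary.json text into sorted (device, status, detail) rows."""
    data = json.loads(summary_json)
    rows = []
    for device, info in data.get("summary", {}).items():
        status = info.get("status", "UNKNOWN")
        detail = info.get("error") or info.get("fingerprint", "")
        rows.append((device, status, detail))
    # Unknown statuses sort last; ties are broken by device name.
    rows.sort(key=lambda r: (STATUS_ORDER.get(r[1], 99), r[0]))
    return rows
```

In a Flask view, the returned rows could be handed to a template to render a table; for Elasticsearch, each row maps naturally to one document per device per run.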

8. How to handle very large configs or many devices concurrently?

Answer: Scale horizontally:

- Use concurrency (ThreadPoolExecutor) when collecting devices.

- Avoid loading all configs into memory; write streams to disk.

- For very large fleets, route collection to multiple automation workers and centralize results via message queue or object store.
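The thread-pool approach from the first bullet might be wired up as follows. This is a sketch: `collect_all` is a hypothetical wrapper, and `collect_device` stands for whatever per-device function you factor out of the backup script (connect, collect, write to disk, return a status dictionary).

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def collect_all(devices, collect_device, max_workers=10):
    """Run collect_device(device) for each device in a thread pool.

    Returns {device: result}; a failure is stored as
    {"status": "ERROR", "error": "..."} instead of aborting the run.
    """
    results = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(collect_device, dev): dev for dev in devices}
        for fut in as_completed(futures):
            dev = futures[fut]
            try:
                results[dev] = fut.result()
            except Exception as exc:  # one bad device must not stop the others
                results[dev] = {"status": "ERROR", "error": str(exc)}
    return results
```

Threads suit this workload because each worker spends most of its time waiting on SSH. Keep the Git add and commit steps in the main thread after collection finishes, since concurrent commits in one working tree will contend for the index lock.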


