[Day #52 PyATS Series] Writing pyATS Plugins for Vendor-Specific Features using pyATS for Cisco [Python for Network Engineer]


Introduction — key points

As a network engineer working in Python, you'll hit the same problem repeatedly: built-in pyATS/Genie capabilities are great, but your network has vendor-specific commands, telemetry, or workflows that need reusable automation. Instead of copying ad-hoc scripts into jobs, build plugins: self-contained Python packages that add domain logic, helpers, parsers, and testcases that other engineers can install and reuse.

In this article you'll learn, end-to-end:

- How to design a pyATS plugin architecture that is testable and versionable.

- How to attach vendor-specific helpers to pyATS Device objects safely.

- How to create custom parsers, aetest testcases and utilities inside the plugin.

- How to package and install the plugin, run its unit tests, and integrate it into CI.

- How to validate results both on the CLI and on a GUI dashboard (Kibana/Grafana).

- How to handle multi-vendor differences (Cisco/Arista/Palo Alto/FortiGate) while keeping a single, consistent plugin interface.

We’ll build a sample plugin called pyats_vendor_tools that demonstrates patterns you can adapt to your organization.

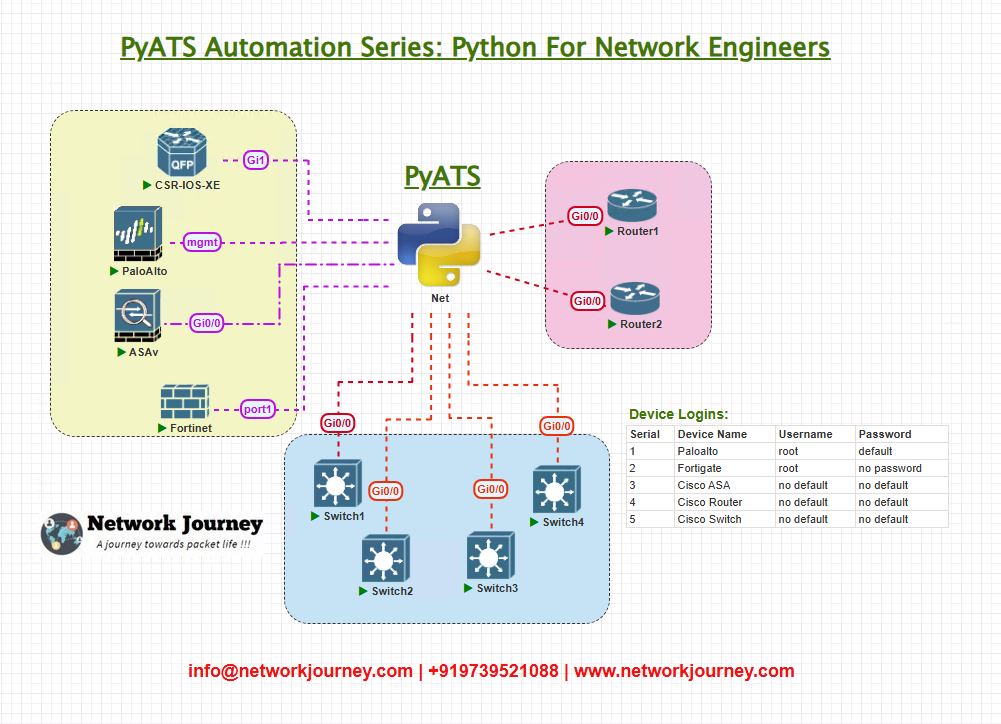

Topology Overview

Use a small management-plane testbed for development and validation:

- AutomationHost runs pyATS tests and plugin code.

- Devices: Cisco IOS-XE (R1), Arista EOS (A1), Palo Alto PAN-OS (PA1), FortiGate (FG1).

- Logs and metrics are optionally pushed to Elasticsearch/Kibana for GUI validation.

Topology & Communications

Management network: All devices reachable via SSH from AutomationHost.

pyATS communications:

- SSH via Genie device objects (device.connect()).

- Plugins will use device.execute() for vendor commands and may call device.parse() when Genie parsers exist.

Data flows:

- Plugin runs on AutomationHost, connects to each device.

- Runs vendor-specific commands (exposed by the plugin as device.get_vendor_feature() and through the device.vendor toolkit).

- Plugin parses raw output into structured JSON (Genie schema or custom dict).

- Plugin persists results to results/ and optionally pushes them to ES for dashboards.

- aetest testcases can assert plugin results and produce pass/fail.

Validation workflow:

- CLI: run plugin routines from pyATS job, inspect device outputs and plugin JSON artifacts.

- GUI: push plugin artifacts to ES and create Kibana panels (e.g., QoS counters, firewall session counts, FortiGate CPU) to validate visually and historically.

Workflow Script — plugin skeleton + pyATS job

We’ll create a plugin package pyats_vendor_tools and a pyATS job vendor_feature_job.py that uses it. The plugin provides:

- register(testbed): attaches helpers to device objects.

- device.get_vendor_feature(): a method attached to the device at runtime.

- A parsers submodule with Genie-style MetaParser classes for outputs that have no stock Genie parser.

- aetest testcases that use plugin helpers.

Directory layout (recommended)

```
pyats_vendor_tools/
├─ pyats_vendor_tools/
│  ├─ __init__.py
│  ├─ register.py
│  ├─ cisco.py
│  ├─ arista.py
│  ├─ paloalto.py
│  ├─ fortigate.py
│  └─ parsers/
│     ├─ __init__.py
│     ├─ cisco_custom.py
│     └─ ...
├─ tests/
│  ├─ test_cisco.py
│  └─ ...
├─ sample_jobs/
│  └─ vendor_feature_job.py
├─ setup.py / pyproject.toml
└─ README.md
```

register.py — attach helpers to devices

```python
# pyats_vendor_tools/register.py
from .cisco import CiscoToolkit
from .arista import AristaToolkit
from .paloalto import PaloAltoToolkit
from .fortigate import FortiGateToolkit

# Substrings of device.os mapped to the toolkit class that handles them.
OS_MAP = {
    'iosxe': CiscoToolkit,
    'iosxr': CiscoToolkit,
    'nxos': CiscoToolkit,
    'eos': AristaToolkit,
    'panos': PaloAltoToolkit,
    'fortios': FortiGateToolkit,
}


def register(testbed):
    """
    Attach vendor toolkits to devices in the given testbed.

    Usage: from pyats_vendor_tools.register import register; register(testbed)
    """
    for dev in testbed.devices.values():
        # device.os may be unset; treat None as an empty string
        os_type = (getattr(dev, 'os', None) or '').lower()
        toolkit_cls = None
        for key, cls in OS_MAP.items():
            if key in os_type:
                toolkit_cls = cls
                break
        if not toolkit_cls:
            # no toolkit for this OS: skip
            continue
        toolkit = toolkit_cls(dev)
        # attach toolkit instance as `dev.vendor` for convenience
        dev.vendor = toolkit
        # attach helper method directly (optional); it is already bound to
        # the toolkit, which holds the device, so no MethodType is needed
        dev.get_vendor_feature = toolkit.get_vendor_feature
```
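Because register() only reads device.os and sets attributes, its attach logic can be checked without a lab. The sketch below is a trimmed, self-contained copy of that logic, not the packaged module: DemoToolkit is a hypothetical stand-in for CiscoToolkit, and types.SimpleNamespace objects stand in for the pyATS testbed and devices (an assumption that is fine for attribute access, but they are not real Device objects).

```python
from types import SimpleNamespace


class DemoToolkit:
    """Hypothetical stand-in for CiscoToolkit: remembers the device it wraps."""

    def __init__(self, device):
        self.device = device

    def get_vendor_feature(self):
        # A real toolkit would call self.device.execute(...) and parse it here.
        return {"device": self.device.name, "feature": {}}


OS_MAP = {"iosxe": DemoToolkit}


def register(testbed):
    """Same attach logic as register.py, trimmed to a single toolkit."""
    for dev in testbed.devices.values():
        os_type = (getattr(dev, "os", None) or "").lower()
        toolkit_cls = next(
            (cls for key, cls in OS_MAP.items() if key in os_type), None
        )
        if toolkit_cls is None:
            continue  # unsupported OS: leave the device untouched
        toolkit = toolkit_cls(dev)
        dev.vendor = toolkit
        # Already bound to `toolkit`, so plain assignment is enough.
        dev.get_vendor_feature = toolkit.get_vendor_feature


# Stand-in objects instead of a real pyATS testbed.
r1 = SimpleNamespace(name="R1", os="iosxe")
fg1 = SimpleNamespace(name="FG1", os="fortios")
testbed = SimpleNamespace(devices={"R1": r1, "FG1": fg1})

register(testbed)
print(r1.get_vendor_feature())
print(hasattr(fg1, "vendor"))
```

The same approach works as a pytest unit test for the real package if you patch OS_MAP with lightweight fakes.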

Example toolkit class (Cisco)

```python
# pyats_vendor_tools/cisco.py
from .parsers.cisco_custom import CiscoCustomParser


class CiscoToolkit:
    """Cisco-specific helpers; keeps a reference to the pyATS device."""

    def __init__(self, device):
        self.device = device

    def get_vendor_feature(self, *args, **kwargs):
        """
        Example helper: run a vendor-specific show and parse with custom parser.
        """
        raw = self.device.execute('show platform hardware qfp active feature throughput')
        # Genie-style MetaParser classes take the device in their constructor
        parser = CiscoCustomParser(device=self.device)
        return parser.cli(output=raw)

    def collect_qos_counters(self):
        # run multiple commands, return dict
        raw = self.device.execute('show policy-map interface | include Service-policy')
        # parse into structured results...
        return {'raw_policy': raw}  # example
```

Example vendor_feature_job.py (pyATS job)

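```python
# sample_jobs/vendor_feature_job.py
import json
import os

from genie.testbed import load
from pyats import aetest

from pyats_vendor_tools.register import register

RESULTS_DIR = 'results'


class VendorFeatureTest(aetest.Testcase):

    @aetest.setup
    def setup(self, testbed):
        register(testbed)  # attach vendor helpers
        os.makedirs(RESULTS_DIR, exist_ok=True)

    @aetest.test
    def gather_and_validate(self, testbed):
        for name, dev in testbed.devices.items():
            if not hasattr(dev, 'get_vendor_feature'):
                continue  # no toolkit registered for this OS
            dev.connect()
            try:
                parsed = dev.get_vendor_feature()
                # save JSON artifact for inspection and dashboards
                path = os.path.join(RESULTS_DIR, f'{name}_parsed.json')
                with open(path, 'w') as f:
                    json.dump(parsed, f, indent=2)
                # simple assertion example (aetest reports it as Failed)
                assert parsed, f"No parsed output for {name}"
            finally:
                dev.disconnect()  # always release the SSH session


if __name__ == '__main__':
    # standalone run: python vendor_feature_job.py
    aetest.main(testbed=load('testbed.yml'))
```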

This pattern avoids modifying pyATS internals and keeps plugin code isolated and testable.

Explanation by Line

Let’s unpack the design decisions and how this makes a robust plugin.

register(testbed)

- Purpose: central entry point. Developers call register(testbed) at the start of a job to enrich Device objects with vendor helpers.

- Why not monkey-patch globally? We keep it explicit: tests call register() so behavior is deterministic.

- OS_MAP maps device.os substrings to toolkit classes. This keeps per-vendor code isolated.

Using toolkit instances

- toolkit = toolkit_cls(dev): the toolkit receives the device and encapsulates vendor commands and parsing logic. That avoids global state.

- Attaching dev.vendor = toolkit gives users a clear namespaced API (dev.vendor.collect_qos_counters()).

Attaching bound methods

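toolkit.get_vendor_feature is already a method bound to the toolkit, which keeps the device in self.device, so exposing it on the device is a plain assignment (dev.get_vendor_feature = toolkit.get_vendor_feature). Wrapping it again with types.MethodType is unnecessary: that would pass dev as an extra positional argument, not as self. The attachment is optional, but convenient for one-line calls.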

Parsers

parser.cli(output=raw) follows the Genie parser style and returns a dict. Parsers live under parsers/ so they can be unit-tested and reused by other tools.

aetest Testcase

- setup: calls register() and sets up the environment.

- gather_and_validate: runs helper methods, persists JSON, asserts expectations.

- Use pyATS aetest to integrate with pyATS reporting and leverage pyats run job tooling if desired.

This approach separates concerns:

- Plugin = toolkit + parsers + tests packaged as a Python module.

- Job = imports plugin and invokes it in a predictable lifecycle.

testbed.yml Example

A testbed with custom metadata (plugin may use device.custom):

```yaml
testbed:
  name: vendor_plugin_lab
  credentials:
    default:
      username: devops
      password: "DevOps!23"   # lab-only value; keep real passwords out of Git

devices:
  R1:
    os: iosxe
    type: router
    connections:
      cli:
        protocol: ssh
        ip: 10.0.100.11
    custom:
      site: LON-DC1
      vendor_tooling:
        enable_feature_x: true
  A1:
    os: eos
    type: switch
    connections:
      cli:
        protocol: ssh
        ip: 10.0.100.21
  PA1:
    os: panos
    type: firewall
    connections:
      cli:
        protocol: ssh
        ip: 10.0.100.31
  FG1:
    os: fortios
    type: firewall
    connections:
      cli:
        protocol: ssh
        ip: 10.0.100.41
```

Notes:

- device.custom is a flexible place to store plugin flags (supported by the pyATS testbed loader).

- Plugins should check device.custom to decide feature toggles.
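The toggle check described in these notes can be wrapped in one small helper so every toolkit reads flags the same way. feature_enabled is a hypothetical name, the sketch assumes device.custom behaves like a mapping (as the YAML custom: block above suggests), and SimpleNamespace objects stand in for pyATS devices.

```python
from types import SimpleNamespace


def feature_enabled(device, flag, default=False):
    """Return custom.vendor_tooling.<flag> for a device, or `default`.

    Hypothetical helper: assumes device.custom is dict-like. Devices without
    a custom block (or without vendor_tooling) fall back to `default`.
    """
    custom = getattr(device, "custom", None) or {}
    tooling = custom.get("vendor_tooling") or {}
    return bool(tooling.get(flag, default))


# Stand-ins mirroring R1 (has the flag) and A1 (no custom block) above.
r1 = SimpleNamespace(custom={"site": "LON-DC1",
                             "vendor_tooling": {"enable_feature_x": True}})
a1 = SimpleNamespace()

print(feature_enabled(r1, "enable_feature_x"))
print(feature_enabled(a1, "enable_feature_x"))
```

A toolkit method could then start with `if not feature_enabled(self.device, "enable_feature_x"): return {}` to skip work on devices that have not opted in.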

Post-validation CLI (Real expected output)

Below are practical example outputs and the plugin’s parsed JSON — include these as screenshots in your article for visual proof.

1) Cisco raw CLI (vendor feature)

```
R1# show platform hardware qfp active feature throughput
Feature  Peak(b/s)  Avg(b/s)  DropPct  Interface
NAT      1200000    300000    0.2      TenGigE0/0/0
FW       500000     120000    1.5      TenGigE0/0/1
```

Plugin parsed JSON

{

"feature": {

"NAT": {"peak_bps": 1200000, "avg_bps": 300000, "drop_pct": 0.2, "interface": "TenGigE0/0/0"},

"FW": {"peak_bps": 500000, "avg_bps": 120000, "drop_pct": 1.5, "interface": "TenGigE0/0/1"}

}

}
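The article does not show parsers/cisco_custom.py itself. Below is a minimal sketch of what it could look like for the table above, assuming exactly the column layout shown there. It follows Genie's cli(output=...) calling convention and accepts an optional device argument, but it is plain Python rather than a genie MetaParser subclass, so it runs without Genie and declares no schema; a production version would subclass MetaParser and add one.

```python
import re


class CiscoCustomParser:
    """Sketch of a qfp feature-throughput parser (not a real MetaParser)."""

    # One data row: feature name, peak b/s, average b/s, drop %, interface.
    ROW = re.compile(
        r"^(?P<feature>\S+)\s+(?P<peak>\d+)\s+(?P<avg>\d+)\s+"
        r"(?P<drop>\d+(?:\.\d+)?)\s+(?P<intf>\S+)$"
    )

    def __init__(self, device=None):
        # Kept for call compatibility with Genie-style parsers.
        self.device = device

    def cli(self, output):
        parsed = {}
        for line in output.splitlines():
            match = self.ROW.match(line.strip())
            if not match:
                continue  # prompt, header or blank line
            parsed.setdefault("feature", {})[match["feature"]] = {
                "peak_bps": int(match["peak"]),
                "avg_bps": int(match["avg"]),
                "drop_pct": float(match["drop"]),
                "interface": match["intf"],
            }
        return parsed


SAMPLE = """\
R1# show platform hardware qfp active feature throughput
Feature  Peak(b/s)  Avg(b/s)  DropPct  Interface
NAT      1200000    300000    0.2      TenGigE0/0/0
FW       500000     120000    1.5      TenGigE0/0/1
"""

print(CiscoCustomParser().cli(output=SAMPLE)["feature"]["FW"]["drop_pct"])
```

Note that this sketch returns an empty dict when no rows match; Genie's schema engine would typically reject an empty result instead, which is usually the safer behaviour in tests.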

2) Arista raw output (LLDP detail sample)

Local Intf: Ethernet1 Remote System: core1.example.com Remote Port: Eth2 TTL: 120

Parsed JSON

{"neighbors": {"Ethernet1": {"remote_system": "core1.example.com", "remote_port": "Eth2", "ttl": 120}}}
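A parser for the LLDP sample can use re.finditer so that several neighbor blocks in one output each produce an entry. parse_lldp_detail is a hypothetical helper and its pattern is built from the simplified sample above; real EOS show lldp neighbors detail output is more verbose (and system names with spaces would need a different pattern). EOS can also return structured data when a command is piped through | json, which is usually more robust than regex.

```python
import re

# Pattern for the simplified sample; \s+ also spans newlines, so both the
# one-line layout and a one-field-per-line layout match.
NEIGHBOR = re.compile(
    r"Local Intf:\s*(?P<local>\S+)\s+"
    r"Remote System:\s*(?P<system>\S+)\s+"
    r"Remote Port:\s*(?P<port>\S+)\s+"
    r"TTL:\s*(?P<ttl>\d+)"
)


def parse_lldp_detail(output):
    """Return {'neighbors': {local_intf: {...}}} for every neighbor block."""
    neighbors = {}
    for match in NEIGHBOR.finditer(output):
        neighbors[match["local"]] = {
            "remote_system": match["system"],
            "remote_port": match["port"],
            "ttl": int(match["ttl"]),
        }
    return {"neighbors": neighbors}


sample = "Local Intf: Ethernet1 Remote System: core1.example.com Remote Port: Eth2 TTL: 120"
print(parse_lldp_detail(sample))
```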

3) Palo Alto sessions

Total Sessions: 1200 TCP Sessions: 800 UDP Sessions: 350 ICMP Sessions: 50

Parsed JSON

{"sessions": {"total": 1200, "tcp": 800, "udp": 350, "icmp": 50}}
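For the session counters, re.findall can collect every "<label> Sessions: <n>" pair into a dict in one pass, and a cheap sanity check can catch parser drift (per-protocol counts should not exceed the total). Both functions are hypothetical, and the regex targets the simplified sample above rather than the full PAN-OS show session info output.

```python
import re

# Matches "<Label> Sessions: <count>" pairs in the simplified sample.
SESSION_COUNTER = re.compile(r"(Total|TCP|UDP|ICMP) Sessions:\s*(\d+)")


def parse_session_summary(output):
    """Return {'sessions': {'total': int, 'tcp': int, ...}}."""
    sessions = {label.lower(): int(count)
                for label, count in SESSION_COUNTER.findall(output)}
    if "total" not in sessions:
        raise ValueError("no 'Total Sessions' counter found")
    return {"sessions": sessions}


def protocol_counts_consistent(parsed):
    """Sanity check: per-protocol sessions should not exceed the total."""
    s = parsed["sessions"]
    return sum(v for k, v in s.items() if k != "total") <= s["total"]


sample = "Total Sessions: 1200 TCP Sessions: 800 UDP Sessions: 350 ICMP Sessions: 50"
parsed = parse_session_summary(sample)
print(parsed, protocol_counts_consistent(parsed))
```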

4) FortiGate perf summary

CPU usage: 12.5% spikes: 0 Memory: 4096MB total, 2048MB used

Parsed JSON

{"cpu": {"usage_percent": 12.5, "spikes_1m": 0}, "mem": {"total_mb": 4096, "used_mb": 2048}}
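The FortiGate summary mixes a percentage with memory figures, so the sketch below uses two targeted re.search patterns and raises if either is missing. That keeps a format change from silently producing a half-empty dict that still passes assertions. parse_perf_summary is hypothetical, the patterns assume the simplified sample above (real FortiOS output differs), and mapping spikes to spikes_1m simply follows the JSON shown.

```python
import re

# Patterns for the simplified FortiGate sample.
CPU = re.compile(r"CPU usage:\s*(?P<usage>\d+(?:\.\d+)?)%\s+spikes:\s*(?P<spikes>\d+)")
MEM = re.compile(r"Memory:\s*(?P<total>\d+)MB total,\s*(?P<used>\d+)MB used")


def parse_perf_summary(output):
    """Return the cpu/mem dict shown above, or raise on unexpected input."""
    cpu, mem = CPU.search(output), MEM.search(output)
    if cpu is None or mem is None:
        # Fail loudly instead of returning partial data.
        raise ValueError("unexpected performance summary format")
    return {
        "cpu": {"usage_percent": float(cpu["usage"]), "spikes_1m": int(cpu["spikes"])},
        "mem": {"total_mb": int(mem["total"]), "used_mb": int(mem["used"])},
    }


parsed = parse_perf_summary("CPU usage: 12.5% spikes: 0 Memory: 4096MB total, 2048MB used")
print(parsed["cpu"]["usage_percent"] < 80)
```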

Validation checklist (CLI → plugin → JSON):

- Ensure the raw command output is saved (results/<device>_raw.txt).

- Ensure the parsed JSON is saved (results/<device>_parsed.json).

- Create assertion tests (e.g., parsed['cpu']['usage_percent'] < 80).
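The checklist's file names and CPU assertion can be captured in two small helpers. save_artifacts and check_cpu are hypothetical names; the results/<device>_raw.txt and results/<device>_parsed.json layout comes from the checklist, and the 80% threshold from its example assertion. Raising AssertionError keeps check_cpu usable both under pytest and inside an aetest test section.

```python
import json
import tempfile
from pathlib import Path


def save_artifacts(device_name, raw, parsed, results_dir="results"):
    """Write raw CLI text and parsed JSON using the checklist's file names."""
    out = Path(results_dir)
    out.mkdir(parents=True, exist_ok=True)
    raw_path = out / f"{device_name}_raw.txt"
    parsed_path = out / f"{device_name}_parsed.json"
    raw_path.write_text(raw)
    parsed_path.write_text(json.dumps(parsed, indent=2, sort_keys=True))
    return raw_path, parsed_path


def check_cpu(parsed, threshold=80.0):
    """Checklist assertion: CPU usage must stay below `threshold` percent."""
    usage = parsed["cpu"]["usage_percent"]
    assert usage < threshold, f"CPU {usage}% is not below {threshold}%"


# Demo in a throwaway directory instead of ./results.
with tempfile.TemporaryDirectory() as tmp:
    parsed = {"cpu": {"usage_percent": 12.5, "spikes_1m": 0}}
    _, parsed_path = save_artifacts("FG1", "CPU usage: 12.5% spikes: 0", parsed, tmp)
    check_cpu(json.loads(parsed_path.read_text()))  # re-read what was saved
    print(parsed_path.name)
```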

Packaging, CI & Production Tips (appendix — deep technical checklist)

To make this plugin production ready, follow this checklist:

- Code quality

- Linting: flake8/black formatting.

- Type hints: add basic typing for clarity.

- Docstrings and README with examples.

- Unit tests

- Store sample outputs for every vendor/version you support.

- Run tests in CI on every PR (pytest).

- Packaging

- pyproject.toml or setup.py for packaging.

- Use pip install . and test in a clean virtualenv.

- Optional: publish to an internal PyPI.

- CI/CD

- On merge: run tests → build wheel → upload to test pip repo.

- Optional: run a smoke job in staging: run a sample pyATS job against a lab testbed.

- Secrets

- Use environment variables / Vault. Provide instructions for ops to configure secrets.

- Provide a secrets.example.env to show the required env var names.

- Observability

- Send plugin execution logs to central logging (ELK).

- Index parsed JSON into ES for dashboards. Provide Kibana sample JSON export.

- Error handling

- Fail fast on a device's connection errors, but continue with the remaining devices.

- Implement retry/backoff for transient SSH failures.

- Backwards compatibility

- Use semantic versioning. If you change parser schema, bump major version and document migration steps.
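The error-handling items above (keep going when one device fails, retry transient SSH failures with backoff) can be sketched in plain Python. with_retries and run_on_all are hypothetical helpers, not pyATS APIs. The retry_on default uses Python's built-in ConnectionError and TimeoutError as an assumption: Unicon raises its own exception classes, so pass the ones your version actually raises. The sleep parameter exists so tests can skip real delays.

```python
import functools
import random
import time


def with_retries(func, attempts=3, base_delay=1.0,
                 retry_on=(ConnectionError, TimeoutError), sleep=time.sleep):
    """Call func(); on a transient error wait base_delay * 2**n (+ jitter) and retry."""
    for attempt in range(attempts):
        try:
            return func()
        except retry_on:
            if attempt == attempts - 1:
                raise  # retries exhausted: surface the error to the caller
            delay = base_delay * 2 ** attempt
            sleep(delay + random.uniform(0, delay / 2))


def run_on_all(devices, task, **retry_options):
    """Run task(device) per device; one device's failure never stops the rest."""
    results, failures = {}, {}
    for name, dev in devices.items():
        try:
            results[name] = with_retries(functools.partial(task, dev), **retry_options)
        except Exception as exc:  # record the failure and move on
            failures[name] = repr(exc)
    return results, failures


calls = {"count": 0}


def flaky_collect(dev):
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("SSH session reset")
    return {"device": dev}


def broken_collect(dev):
    raise TimeoutError("no prompt")


no_wait = lambda _seconds: None  # skip real sleeps in this demo
ok, _ = run_on_all({"R1": "R1"}, flaky_collect, sleep=no_wait)
_, failed = run_on_all({"FG1": "FG1"}, broken_collect, sleep=no_wait)
print(ok, list(failed))
```

Adding random jitter to the exponential delay spreads reconnect attempts out, so several scheduled jobs do not hit a briefly overloaded device at the same moment.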

FAQS

1. What exactly is a “pyATS plugin” in this context?

Answer: In this workbook a “plugin” is a well-structured, installable Python package that provides vendor-specific toolkits, parsers and aetest testcases. It doesn’t require changing pyATS internals — instead you call register(testbed) in your job to attach helpers and use them. This keeps the solution simple, safe and portable.

2. How do I attach helpers to Device objects without breaking pyATS internals?

Answer: Attach a namespaced attribute (for example device.vendor = toolkit) or bind a method with types.MethodType. Don’t override built-ins. Keep attachments explicitly created by register(testbed) so you control lifecycle and teardown.

3. Where do custom parsers belong and how should they be tested?

Answer: Put parsers under parsers/ inside your plugin. Write unit tests referencing samples/*.txt and run pytest. Use the Genie MetaParser pattern if you want schema discipline; otherwise make sure parsers return validated dictionaries.
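If you skip Genie's MetaParser, a few lines of recursive checking still give parser output some schema discipline in unit tests. validate and THROUGHPUT_SCHEMA are a hypothetical sketch, not the Genie schema engine: a schema is either a Python type or a dict of sub-schemas, the key str acts as a wildcard for any string key (similar in spirit to Genie's Any()), and extra keys are ignored.

```python
def validate(data, schema, path="root"):
    """Raise if `data` does not match `schema`; return None when it does."""
    if not isinstance(schema, dict):
        if not isinstance(data, schema):
            raise TypeError(f"{path}: expected {schema.__name__}, got {type(data).__name__}")
        return
    if not isinstance(data, dict):
        raise TypeError(f"{path}: expected dict, got {type(data).__name__}")
    if list(schema) == [str]:
        # Wildcard: every key must be a string and match the sub-schema.
        for key, value in data.items():
            if not isinstance(key, str):
                raise TypeError(f"{path}: key {key!r} is not a string")
            validate(value, schema[str], f"{path}.{key}")
        return
    missing = schema.keys() - data.keys()
    if missing:
        raise KeyError(f"{path}: missing keys {sorted(missing)}")
    for key, sub_schema in schema.items():
        validate(data[key], sub_schema, f"{path}.{key}")


# Shape of the Cisco throughput JSON shown earlier in this article.
THROUGHPUT_SCHEMA = {
    "feature": {
        str: {"peak_bps": int, "avg_bps": int, "drop_pct": float, "interface": str},
    },
}

good = {"feature": {"NAT": {"peak_bps": 1200000, "avg_bps": 300000,
                            "drop_pct": 0.2, "interface": "TenGigE0/0/0"}}}
validate(good, THROUGHPUT_SCHEMA)  # passes silently
```

In a pytest suite you would load each samples/*.txt file, run the parser on it and call validate on the result.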

4. How do you handle differences across vendor CLIs in a single plugin?

Answer: Implement per-vendor toolkit classes (e.g., CiscoToolkit, AristaToolkit), each with the same high-level method names (collect_telemetry(), get_sessions()), but with vendor-specific implementations. The plugin runner can pick the correct toolkit based on device.os.
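One way to enforce "same method names, vendor-specific implementations" is an abstract base class: Python refuses to instantiate a subclass that forgets an abstract method, and shared logic can be written once against the common return shape. VendorToolkit, sessions_within_limit, IncompleteToolkit and FakeDevice are hypothetical, and this PaloAltoToolkit is only a sketch whose regex assumes the simplified session sample shown earlier in this article; show session info is the PAN-OS command, but its real output is more detailed than that sample.

```python
import re
from abc import ABC, abstractmethod


class VendorToolkit(ABC):
    """Hypothetical common interface shared by every per-vendor toolkit."""

    def __init__(self, device):
        self.device = device

    @abstractmethod
    def get_sessions(self):
        """Return {'sessions': {'total': int, ...}} whatever the vendor."""

    def sessions_within_limit(self, max_total):
        # Vendor-neutral logic, written once against the common schema.
        return self.get_sessions()["sessions"]["total"] <= max_total


class PaloAltoToolkit(VendorToolkit):
    def get_sessions(self):
        raw = self.device.execute("show session info")
        counts = re.findall(r"(\w+) Sessions:\s*(\d+)", raw)
        return {"sessions": {label.lower(): int(n) for label, n in counts}}


class IncompleteToolkit(VendorToolkit):
    """Forgot get_sessions(), so instantiating it raises TypeError."""


class FakeDevice:
    """Stand-in for a pyATS Device that returns canned CLI output."""

    def __init__(self, output):
        self.output = output

    def execute(self, command):
        return self.output


pa = PaloAltoToolkit(FakeDevice("Total Sessions: 1200 TCP Sessions: 800"))
print(pa.sessions_within_limit(1500))
```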

5. How should I package, release and version a plugin for my team?

Answer: Use pyproject.toml + setuptools/flit, follow semantic versioning, publish to an internal PyPI or distribute via pip install -e . for dev. Maintain changelog and release notes. CI should run unit tests and lint on PRs.

6. How to validate plugin outputs in a GUI (Kibana/Grafana)?

Answer: After parsing, index JSON documents into Elasticsearch (index: vendor-tools-*) or push metrics to Prometheus via a custom exporter. Build dashboards that show the parsed fields (e.g., CPU, session counts, QoS drop_pct) so non-dev teams can validate visually.
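Indexing into Elasticsearch usually means sending a bulk request: newline-delimited JSON where each document is preceded by an action line naming the target index, ending with a trailing newline. The hypothetical helper below only builds that body with the standard library; the daily vendor-tools-YYYY.MM.DD index name and the @timestamp and device fields are assumptions chosen to fit the vendor-tools-* pattern mentioned above. The body could then be POSTed to the cluster's _bulk endpoint with Content-Type: application/x-ndjson, or equivalent documents handed to the official Python client's helpers.bulk.

```python
import json
from datetime import datetime, timezone


def to_bulk_ndjson(results, index_prefix="vendor-tools", now=None):
    """Build a _bulk request body from {device_name: parsed_dict}."""
    now = now or datetime.now(timezone.utc)
    index = f"{index_prefix}-{now:%Y.%m.%d}"  # one index per day
    lines = []
    for device_name, parsed in results.items():
        lines.append(json.dumps({"index": {"_index": index}}))  # action line
        lines.append(json.dumps({"@timestamp": now.isoformat(),
                                 "device": device_name, **parsed}))
    return "\n".join(lines) + "\n"  # bulk bodies must end with a newline


fixed = datetime(2025, 8, 1, tzinfo=timezone.utc)
print(to_bulk_ndjson({"FG1": {"cpu": {"usage_percent": 12.5}}}, now=fixed), end="")
```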

7. Can plugins be used in production jobs and schedule-based automation?

Answer: Absolutely — once unit tested and packaged. Use cron, systemd timers, or pipeline jobs (Jenkins/GitHub Actions) to run pyATS jobs which load the plugin and perform checks. Make sure to handle failures gracefully (timeouts, retries).

8. How do I secure sensitive data (credentials, secrets) used by plugins?

Answer: Never hardcode secrets. Use pyATS credential mechanisms, environment variables, or an external Vault (HashiCorp Vault, AWS Secrets Manager). Mask any secrets before writing to logs or committing to Git.
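Masking can be enforced centrally with a logging.Filter attached to the handlers that write plugin logs. RedactSecrets is a hypothetical class, and its regular expression only catches simple key=value or key: value pairs for password, secret and token, so extend it for your own formats. Attaching it to the handler rather than a logger means records propagated from child loggers are masked too.

```python
import logging
import re


class RedactSecrets(logging.Filter):
    """Mask password-like values before a record is emitted."""

    PATTERN = re.compile(r"(?i)\b(password|secret|token)(\s*[=:]\s*)(\S+)")

    def filter(self, record):
        message = record.getMessage()  # apply %-args first
        record.msg = self.PATTERN.sub(r"\1\2****", message)
        record.args = ()  # message is already fully formatted
        return True  # never drop the record, only rewrite it


handler = logging.StreamHandler()
handler.addFilter(RedactSecrets())
log = logging.getLogger("pyats_vendor_tools")
log.addHandler(handler)
log.warning("login failed: username=devops password=%s", "DevOps!23")
```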


Join Our Training

If you want step-by-step instructor-led sessions that turn these plugin skeletons into production-grade, enterprise tools (packaging, CI, secure secrets, dashboards, remediation playbooks), Trainer Sagar Dhawan is running a 3-month instructor-led course covering Python, Ansible, APIs and Cisco DevNet for Network Engineers. The course walks through real projects — exactly like this plugin — and gives you the confidence to deploy automation safely at scale.

Learn more and enroll:

https://course.networkjourney.com/python-ansible-api-cisco-devnet-for-network-engineers/

If your goal is to become a confident Python for Network Engineer who can design, code, test and run automation for multi-vendor environments, this program will accelerate you.

Enroll Now & Future‑Proof Your Career

Email: info@networkjourney.com

WhatsApp / Call: +91 97395 21088

![[Day #52 PyATS Series] Writing pyATS Plugins for Vendor-Specific Features using pyATS for Cisco [Python for Network Engineer]](https://networkjourney.com/wp-content/uploads/2025/08/Day-52-PyATS-Series-Writing-pyATS-Plugins-for-Vendor-Specific-Features-using-pyATS-for-Cisco.png)
