[Day #65 PyATS Series] Multi-vendor SNMP Trap Testing Using pyATS for Cisco [Python for Network Engineer]


Introduction — key points

SNMP traps are still a cornerstone of network monitoring: they provide near-real-time notifications for link events, process failures, environmental alarms and security events. But traps are UDP-based, vendor-specific, and frequently misconfigured — so testing trap delivery and parsing becomes essential when you onboard devices or update monitoring stacks.

In this article you’ll learn how to:

- Build a robust trap receiver (Python / pysnmp) that stores traps as JSON evidence.

- Simulate trap emission (useful for devices that can’t or won’t emit synthetic traps).

- Use pyATS to orchestrate multi-vendor validation: configure test traps, wait for delivery, assert OIDs/payloads, verify trap ingestion into a GUI (Elasticsearch / Logstash / Kibana or a simple Flask web UI).

- Validate both the control-plane evidence (device CLI, SNMP counters) and the monitoring-plane evidence (receiver logs, parsed JSON, GUI dashboards).

- Handle SNMPv2c and SNMPv3 security considerations and common pitfalls (time sync, community strings, UDP reliability).

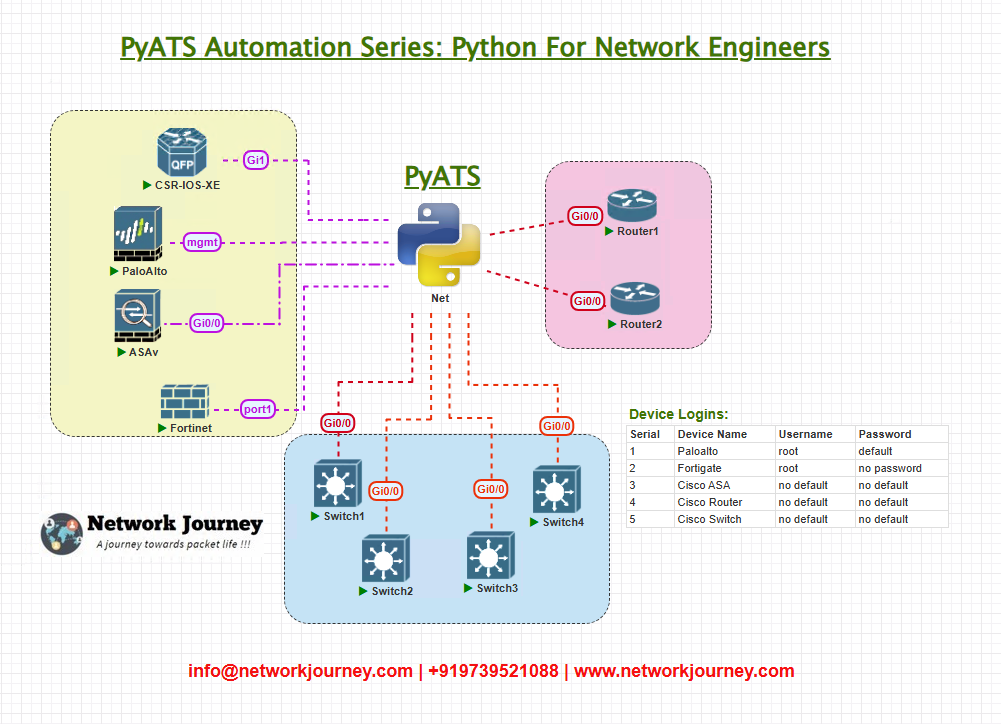

Topology Overview

We’ll use a small but realistic topology for the exercise:

- Automation Host (10.0.100.10) runs:

  - pyATS jobs (controller),

  - Trap Receiver (Python pysnmp) that listens on UDP/162 (or a non-privileged port for dev),

  - Optional ingestion to Elasticsearch/Logstash (for GUI).

- Devices are configured to send SNMP traps to the receiver’s IP.

- We’ll simulate traps when needed (device cannot emit or to create specific trap payloads).

Topology & Communications — what we collect and why

What we collect (per device & globally):

- Device CLI evidence:

  - SNMP configuration (show running-config | include snmp or vendor equivalent).

  - SNMP counters (if available): show snmp statistics / show snmp / show snmp user (vendor dependent).

  - Syslog (show logging) to correlate events that triggered traps (link down message, interface flaps).

- Trap evidence (on Automation Host):

  - Raw trap PDUs (binary/BER),

  - Parsed OID → value mapping,

  - Timestamp, source IP, community (v2c) or user context (v3),

  - Stored JSON events (for auditing and GUI ingestion).

- GUI evidence (optional but recommended):

  - Elasticsearch index documents (one document per trap),

  - Kibana dashboard snapshots showing recent traps per device, top OIDs, counts over time.

Communications & protocol considerations:

- SNMPv2c: simple, community string authentication. Traps are sent to UDP port 162 on the collector. No encryption. Easy to test but insecure in production.

- SNMPv3: supports authentication (MD5/SHA) and encryption (DES/AES). Tests must handle user credentials, auth/priv parameters and engineIDs. pysnmp supports SNMPv3 but requires more setup.

- UDP unreliability: traps may be lost; design tests to allow retries or require multiple trap occurrences for high confidence.

- Time sync: collector and devices must have synchronized time (NTP) for accurate timestamps in correlation (otherwise sequence matching and GUI timelines look wrong).

- MIBs: traps are OID based. Mapping OIDs to human names requires MIB files; for testing you can assert on numeric OIDs or include a MIB lookup step if desired.
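If you assert on numeric OIDs, a small hand-maintained lookup table is often enough to make test output readable without loading MIB files. The sketch below is only an illustration: the table KNOWN_OIDS and the helper oid_name() are hypothetical names, and the entries are limited to a few standard SNMPv2-MIB / IF-MIB objects.

```python
# Hypothetical, hand-maintained lookup table for test output.
# The names are the standard SNMPv2-MIB / IF-MIB object names.
KNOWN_OIDS = {
    "1.3.6.1.2.1.1.3.0": "sysUpTime.0",
    "1.3.6.1.6.3.1.1.4.1.0": "snmpTrapOID.0",
    "1.3.6.1.6.3.1.1.5.3": "linkDown",
    "1.3.6.1.6.3.1.1.5.4": "linkUp",
    "1.3.6.1.6.3.1.1.5.5": "authenticationFailure",
}


def oid_name(oid: str) -> str:
    """Return a friendly name for a numeric OID, or the OID itself if unknown."""
    return KNOWN_OIDS.get(oid.lstrip("."), oid)


if __name__ == "__main__":
    print(oid_name("1.3.6.1.6.3.1.1.5.3"))
    print(oid_name("1.3.6.1.4.1.9.9.41.2.0.1"))  # not in the table, printed as-is
```

Unknown OIDs fall through unchanged, so assertions can keep comparing numeric strings while logs show names where they are known.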

Workflow Script — full, runnable components

Below are three key scripts/components you’ll use in the lab. I’ll provide full code and explain each line in the following section.

- Trap Receiver (Python using pysnmp): listens for SNMPv2c and simple SNMPv3 traps and writes parsed JSON to disk. Save as trap_receiver.py.

- Trap Injector (Python using pysnmp): simulates device trap emission for test cases. Save as trap_injector.py.

- pyATS Job (snmp_trap_test.py): orchestrates the test. It ensures the receiver is running, optionally configures device SNMP destinations (read-only guidance), fires test trap(s) (either via device or injector), waits for traps, validates parsed content and CLI counters, and optionally asserts GUI ingestion (Elasticsearch query).

Below are the scripts.

4.1 trap_receiver.py (Trap collector using pysnmp)

Note: For lab/dev, bind to a non-privileged port (e.g., 2162) if you don’t run as root. In production, use port 162 with proper privileges or run the receiver as a system service.

#!/usr/bin/env python3
"""
trap_receiver.py

Simple SNMP trap receiver (v2c and v3 support) using pysnmp.
Saves each parsed trap as one JSON document under results/traps/<timestamp>_<src>.json
"""

import argparse
import json
import logging
import time
from pathlib import Path

# pysnmp 4.x transport (asyncore-based)
from pysnmp.carrier.asyncore.dgram import udp
from pysnmp.entity import engine, config
from pysnmp.entity.rfc3413 import ntfrcv

logging.basicConfig(level=logging.INFO, format='[%(asctime)s] %(message)s')

ROOT = Path("results/traps")
ROOT.mkdir(parents=True, exist_ok=True)


def start_receiver(listen_addr="0.0.0.0", listen_port=2162, community=b'public', v3_users=None):
    """
    listen_addr: address to bind
    listen_port: UDP port (non-privileged 2162 default)
    community: default community to accept for v2c tests
    v3_users: list of tuples for v3: (user, authKey, privKey, authProtocol, privProtocol),
              where the protocols are pysnmp constants such as
              config.usmHMACSHAAuthProtocol and config.usmAesCfb128Protocol
    """
    snmpEngine = engine.SnmpEngine()

    # v2c/communities
    config.addV1System(snmpEngine, 'my-area', community.decode() if isinstance(community, bytes) else community)

    # UDP transport
    config.addTransport(
        snmpEngine,
        udp.domainName,
        udp.UdpTransport().openServerMode((listen_addr, listen_port))
    )

    # Accept v3 users if provided
    if v3_users:
        for u, authKey, privKey, authProt, privProt in v3_users:
            # addV3User takes the protocol constant and the key as separate arguments
            config.addV3User(
                snmpEngine, u,
                authProt or config.usmNoAuthProtocol, authKey,
                privProt or config.usmNoPrivProtocol, privKey
            )

    def cbFun(snmpEngine, stateReference, contextEngineId, contextName,
              varBinds, cbCtx):
        # Look up the sender's address for this PDU
        try:
            transportDomain, transportAddress = snmpEngine.msgAndPduDsp.getTransportInfo(stateReference)
        except Exception:
            transportAddress = ('unknown', 0)
        src_ip = transportAddress[0] if isinstance(transportAddress, tuple) else str(transportAddress)
        ts = time.strftime("%Y%m%dT%H%M%SZ", time.gmtime())

        # Build readable dict
        trap = {
            "received_at": ts,
            "source": src_ip,
            "contextName": str(contextName.prettyPrint()) if contextName else "",
            "varBinds": []
        }
        for oid, val in varBinds:
            try:
                # Convert types into printable forms
                trap["varBinds"].append({"oid": str(oid), "value": str(val)})
            except Exception:
                trap["varBinds"].append({"oid": str(oid), "value": repr(val)})

        # Save JSON. The name has one-second resolution, so two traps from the
        # same source within the same second share a filename.
        fname = ROOT / f"{ts}_{src_ip.replace('.', '_')}.json"
        with open(fname, "w") as f:
            json.dump(trap, f, indent=2)
        logging.info("Saved trap from %s -> %s (%d vars)", src_ip, fname, len(trap["varBinds"]))

    # Register SNMP TRAP receiver
    ntfrcv.NotificationReceiver(snmpEngine, cbFun)

    logging.info("Starting SNMP trap receiver on %s:%d (results -> %s)", listen_addr, listen_port, ROOT)
    try:
        snmpEngine.transportDispatcher.jobStarted(1)
        snmpEngine.transportDispatcher.runDispatcher()
    except KeyboardInterrupt:
        logging.info("Shutting down receiver")
    except Exception as e:
        logging.exception("Receiver error: %s", e)
    finally:
        try:
            snmpEngine.transportDispatcher.closeDispatcher()
        except Exception:
            pass


if __name__ == "__main__":
    ap = argparse.ArgumentParser()
    ap.add_argument("--listen-addr", default="0.0.0.0")
    ap.add_argument("--listen-port", default=2162, type=int)
    ap.add_argument("--community", default="public")
    args = ap.parse_args()
    start_receiver(listen_addr=args.listen_addr, listen_port=args.listen_port, community=args.community.encode())

Notes:

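- The receiver stores a JSON file per trap under results/traps/. Each JSON has received_at, source, contextName and varBinds (list of {oid, value}).

- For SNMPv3, pass v3_users with appropriate auth/priv settings; see the pysnmp docs and adapt. For v3 traps the receiver must also know each sender's engine ID (the securityEngineId argument of config.addV3User).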

4.2 trap_injector.py (simulate trap emission)

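#!/usr/bin/env python3
"""
trap_injector.py

Send an SNMP v2c trap to the collector using pysnmp (useful for test automation).
"""

import argparse
import sys

from pysnmp.hlapi import (
    CommunityData, ContextData, NotificationType, ObjectIdentity,
    SnmpEngine, UdpTransportTarget, sendNotification,
)


def send_v2_trap(target_ip, target_port, community, oid, var_binds=None):
    """
    target_ip: collector IP
    target_port: collector UDP port
    community: v2c community string (defaults to 'public')
    oid: OID string for the trap (e.g. '1.3.6.1.6.3.1.1.5.3' for linkDown)
    var_binds: optional list of pysnmp ObjectType instances to append to the trap

    Returns True if the trap was handed to the network without a local error.
    """
    community = community or 'public'
    notification = NotificationType(ObjectIdentity(oid))
    if var_binds:
        notification.addVarBinds(*var_binds)

    # sendNotification() returns a generator; next() is what actually sends the trap
    errorIndication, errorStatus, errorIndex, varBinds = next(
        sendNotification(
            SnmpEngine(),
            CommunityData(community),  # mpModel defaults to 1, i.e. SNMPv2c
            UdpTransportTarget((target_ip, target_port)),
            ContextData(),
            'trap',
            notification
        )
    )
    if errorIndication:
        print("Error sending trap:", errorIndication)
        return False
    print("Trap sent to %s:%s OID=%s" % (target_ip, target_port, oid))
    return True


if __name__ == "__main__":
    ap = argparse.ArgumentParser()
    ap.add_argument("--target", required=True)
    ap.add_argument("--port", default=2162, type=int)
    ap.add_argument("--community", default="public")
    ap.add_argument("--oid", default="1.3.6.1.6.3.1.1.5.3")  # linkDown by default
    args = ap.parse_args()
    ok = send_v2_trap(args.target, args.port, args.community, args.oid)
    sys.exit(0 if ok else 1)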

Notes:

- Use this to simulate traps from the device. In real runs you may either instruct devices to emit a trap (device feature) or use this injector as the simulated device.

4.3 snmp_trap_test.py — pyATS aetest workflow (orchestrator)

#!/usr/bin/env python3
"""
snmp_trap_test.py

A pyATS aetest test script to validate SNMP trap reception across multiple devices.

Workflow:
- Ensure trap receiver is running (assume started externally)
- Optionally trigger a trap from device or via injector
- Wait for trap file in results/traps and validate OID/contents
- Verify device CLI shows trap being sent (optional)
"""

import argparse
import glob
import json
import os
import subprocess
import sys
import time

from pyats import aetest
from genie.testbed import load

TRAP_DIR = "results/traps"
SNMP_TRAP_OID = "1.3.6.1.6.3.1.1.4.1.0"  # snmpTrapOID.0: its value is the trap type


class SNMPTrapTests(aetest.Testcase):

    @aetest.setup
    def setup(self, testbed):
        self.testbed = load(testbed)
        # Ensure trap dir exists
        os.makedirs(TRAP_DIR, exist_ok=True)
        # Baseline of existing files, used to detect new traps
        self.start_files = set(glob.glob(os.path.join(TRAP_DIR, "*.json")))
        self.collector = {"ip": "10.0.100.10", "port": 2162, "community": "public"}

    @aetest.test
    def trigger_and_validate_trap(self):
        """
        For this demo we trigger a synthetic trap using trap_injector.py.
        For production, you can instead use device commands to generate traps.
        """
        # 1) Trigger trap via injector on Automation host (argument list, no shell)
        injector_cmd = [
            sys.executable, "trap_injector.py",
            "--target", self.collector["ip"],
            "--port", str(self.collector["port"]),
            "--community", self.collector["community"],
            "--oid", "1.3.6.1.6.3.1.1.5.3",
        ]
        print("[INFO] Triggering trap:", " ".join(injector_cmd))
        subprocess.Popen(injector_cmd)

        # 2) Wait for new file in TRAP_DIR
        timeout = 15
        interval = 1
        found = None
        for _ in range(timeout):
            files = set(glob.glob(os.path.join(TRAP_DIR, "*.json")))
            new_files = files - self.start_files
            if new_files:
                found = sorted(new_files)[0]
                break
            time.sleep(interval)
        assert found, "No trap received within timeout"
        print("[INFO] Received trap file:", found)
        with open(found) as f:
            data = json.load(f)

        # 3) Basic assertions: varBinds exist and snmpTrapOID.0 carries the expected trap OID
        assert "varBinds" in data and len(data["varBinds"]) > 0, "Trap has no varBinds"
        expected_oid = "1.3.6.1.6.3.1.1.5.3"
        # The trap type is the VALUE of the snmpTrapOID.0 varBind, not a varBind name
        trap_oids = [v["value"] for v in data["varBinds"] if v["oid"] == SNMP_TRAP_OID]
        assert expected_oid in trap_oids, f"Expected trap OID {expected_oid} not found in varBinds {data['varBinds']}"

    @aetest.test
    def cli_validation(self):
        """
        Optional: connect to each device and check SNMP config or counters
        (this is vendor dependent; show examples)
        """
        for name, device in self.testbed.devices.items():
            device.connect()
            try:
                # Example Cisco: read snmp config lines (use the vendor equivalent elsewhere)
                try:
                    snmp_cfg = device.execute("show running-config | include snmp")
                except Exception:
                    snmp_cfg = ""
                print(f"[{name}] snmp config snippet:\n{snmp_cfg[:400]}")
                # Basic check
                assert self.collector['ip'] in snmp_cfg or 'snmp-server' in snmp_cfg, f"Device {name} doesn't appear to have SNMP host configured"
            finally:
                # Always release the session, even when the assertion fails
                device.disconnect()


if __name__ == "__main__":
    # Parse --testbed here and hand it to aetest as the 'testbed' script parameter
    parser = argparse.ArgumentParser()
    parser.add_argument("--testbed", required=True, help="path to the testbed YAML file")
    args, remaining = parser.parse_known_args()
    sys.argv = sys.argv[:1] + remaining
    aetest.main(testbed=args.testbed)

Notes:

- This aetest job is intentionally simple: it triggers a local injector and waits for the trap file.

- In production, you may replace the injector with device-side trap generation (e.g., test snmp trap or cause a real event like interface shutdown to generate linkDown). I keep write operations out of the job by design for safety.

- Use pyATS reports and logs for pass/fail evidence.

Explanation by Line — deep annotated walkthrough

I’ll explain the important parts of each script so your students understand the architecture and why we designed it this way.

5.1 Trap receiver (trap_receiver.py)

- SnmpEngine(): core pysnmp engine that handles BER decoding and PDU assembly.

- config.addV1System(snmpEngine, 'my-area', community): registers a v1/v2c community. This allows us to accept traps with that community string. In production you may add many communities.

- config.addTransport(... udp.UdpTransport().openServerMode((listen_addr, listen_port))): binds a UDP listener.

- ntfrcv.NotificationReceiver(snmpEngine, cbFun): registers a callback that will be invoked for each trap PDU.

- cbFun(...): receives varBinds. We iterate each OID/value, convert to string, assemble a dictionary and persist to JSON for auditing.

- Writing per-trap JSON files makes the artifacts portable (attach to tickets, index to ES, etc.). This is intentionally simple and auditable.

Why JSON files per trap? They’re easy to parse in pyATS and CI, simple to index into Elasticsearch (curl -XPOST ... -d @file.json), and make a clean evidence trail for NOC/QA.
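As an illustration of how the per-trap files can feed Elasticsearch in one request, here is a minimal sketch that turns a directory of trap JSON files into a _bulk request body (newline-delimited JSON: one action line followed by one document line per trap). The helper name build_bulk_body() is hypothetical, and the index name snmp-traps is taken from the query example later in this article; adapt both to your setup.

```python
import json
from pathlib import Path


def build_bulk_body(trap_dir: str, index: str = "snmp-traps") -> str:
    """Build an Elasticsearch _bulk request body (NDJSON) from saved trap files."""
    lines = []
    for path in sorted(Path(trap_dir).glob("*.json")):
        doc = json.loads(path.read_text())
        # Action line first, then the document itself.
        lines.append(json.dumps({"index": {"_index": index}}))
        lines.append(json.dumps(doc))
    if not lines:
        return ""
    # The bulk API expects the body to end with a newline.
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    body = build_bulk_body("results/traps")
    print(body or "no trap files found")
```

The returned string can be posted to the _bulk endpoint with Content-Type application/x-ndjson; the sketch deliberately stops short of making the HTTP call so it can be run without a cluster.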

5.2 Trap injector (trap_injector.py)

- Uses pysnmp.hlapi.sendNotification(...) to send an SNMPv2c trap. This is vendor-agnostic and useful for synthetic testing.

- The default OID 1.3.6.1.6.3.1.1.5.3 corresponds to the generic linkDown trap (SNMPv2 standard). Using standard OIDs makes cross-vendor validation easier.

Why simulate traps? Device-generated traps can be hard to trigger deterministically in a lab. The injector lets you validate the entire monitoring pipeline (collector, parser, ingestion) without device changes.

5.3 pyATS job (snmp_trap_test.py)

- setup() loads the pyATS testbed and records existing trap files to detect new arrivals, a simple and reliable approach.

- trigger_and_validate_trap():

  - launches trap_injector.py in background (a subprocess). In a real test you may instead execute a command on the device that triggers a trap (device.execute("test snmp trap ...")), or temporarily cause a link event.

  - waits up to timeout seconds for a new trap JSON file to appear. This is a common pattern (poll for evidence).

  - loads the JSON and asserts expected OIDs/payload.

- cli_validation() connects to each device (using testbed credentials) and checks for SNMP configuration (a conservative assertion to ensure devices are sending to the collector).

- The test design intentionally keeps the orchestration read-only regarding device configs. If you need to temporarily configure test SNMP targets, do that in a separate, auditable config change with approvals.
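The "poll for evidence" step can be factored into a small reusable helper. The sketch below is one possible shape, not the code used in the job above: wait_for_new_files() is a hypothetical name, and it uses a monotonic deadline so the timeout is not affected by wall-clock adjustments.

```python
import glob
import os
import time


def wait_for_new_files(directory, baseline, timeout=15.0, interval=1.0):
    """Poll `directory` until a *.json file that is not in `baseline` appears.

    Returns the sorted list of new paths, or an empty list on timeout.
    """
    deadline = time.monotonic() + timeout
    while True:
        current = set(glob.glob(os.path.join(directory, "*.json")))
        new_files = sorted(current - set(baseline))
        if new_files:
            return new_files
        if time.monotonic() >= deadline:
            return []
        time.sleep(interval)


if __name__ == "__main__":
    before = set(glob.glob(os.path.join("results/traps", "*.json")))
    print(wait_for_new_files("results/traps", before, timeout=5, interval=0.5))
```

Returning every new file, rather than only the first, lets a test assert on how many traps arrived, which helps when a device emits more than one notification per event.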

Why use pyATS? aetest gives standardized reporting, integrates with existing pyATS pipelines, and students learn how to combine device verification with monitoring-plane verification.

testbed.yml Example

Use this testbed file (adjust IPs and credentials for your lab):

testbed:
  name: snmp_lab
  credentials:
    default:
      username: netops
      password: NetOps!23

devices:
  R1:
    os: iosxe
    type: router
    connections:
      cli:
        protocol: ssh
        ip: 10.0.100.21
  A1:
    os: eos
    type: switch
    connections:
      cli:
        protocol: ssh
        ip: 10.0.100.22
  PA1:
    os: panos
    type: firewall
    connections:
      cli:
        protocol: ssh
        ip: 10.0.100.23
  FG1:
    os: fortios
    type: firewall
    connections:
      cli:
        protocol: ssh
        ip: 10.0.100.24

Tip: On devices you can (manually or via Ansible) configure:

- SNMPv2c:

  snmp-server community public RO
  snmp-server host 10.0.100.10 version 2c public

- SNMPv3 (example on IOS; MONITOR is an example group name):

  snmp-server group MONITOR v3 priv
  snmp-server user monitorUser MONITOR v3 auth sha myAuthPass priv aes 128 myPrivPass
  snmp-server host 10.0.100.10 version 3 priv monitorUser

If the receiver listens on the lab port instead of 162, append udp-port 2162 to the snmp-server host line.

But in this course material I avoid automated config writes in the pyATS job, so configure SNMP on test devices before running tests.

Post-validation CLI

Below are textual “screenshots” you can include in your course slides or blog to show students what to expect.

A. Trap Receiver log (automation host)

[2025-08-28 10:12:01] Saved trap from 10.0.100.21 -> results/traps/20250828T101200Z_10_0_100_21.json (3 vars)
[2025-08-28 10:12:12] Saved trap from 10.0.100.22 -> results/traps/20250828T101211Z_10_0_100_22.json (4 vars)

B. Sample trap JSON (results/traps/20250828T101200Z_10_0_100_21.json)

{
  "received_at": "20250828T101200Z",
  "source": "10.0.100.21",
  "contextName": "",
  "varBinds": [
    {"oid": "1.3.6.1.6.3.1.1.4.1.0", "value": "1.3.6.1.6.3.1.1.5.3"},
    {"oid": "1.3.6.1.2.1.2.2.1.1.2", "value": "2"},
    {"oid": "1.3.6.1.2.1.2.2.1.8.2", "value": "2"}
  ]
}

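Interpretation: The snmpTrapOID.0 varBind (1.3.6.1.6.3.1.1.4.1.0) carries the trap type as its value (linkDown); the other varBinds map to ifIndex and ifOperStatus for interface 2 (ifOperStatus 2 = down). A real SNMPv2c trap normally also carries sysUpTime.0 as its first varBind.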
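To make that interpretation testable, a small helper can pull the trap type and interface details out of the stored JSON. This is a minimal sketch that assumes the file layout shown in the sample above; summarize_link_trap() and the OPER_STATUS table are hypothetical names introduced here for illustration.

```python
SNMP_TRAP_OID = "1.3.6.1.6.3.1.1.4.1.0"          # snmpTrapOID.0
IF_INDEX_PREFIX = "1.3.6.1.2.1.2.2.1.1."          # ifIndex.<n>
IF_OPER_STATUS_PREFIX = "1.3.6.1.2.1.2.2.1.8."    # ifOperStatus.<n>
OPER_STATUS = {"1": "up", "2": "down"}


def summarize_link_trap(trap: dict) -> dict:
    """Extract trap type, ifIndex and ifOperStatus from a stored trap document."""
    summary = {
        "source": trap.get("source"),
        "trap_oid": None,
        "if_index": None,
        "oper_status": None,
    }
    for vb in trap.get("varBinds", []):
        oid, value = vb["oid"], vb["value"]
        if oid == SNMP_TRAP_OID:
            summary["trap_oid"] = value
        elif oid.startswith(IF_INDEX_PREFIX):
            summary["if_index"] = int(value)
        elif oid.startswith(IF_OPER_STATUS_PREFIX):
            # Fall back to the raw value for states not in the small table.
            summary["oper_status"] = OPER_STATUS.get(value, value)
    return summary


SAMPLE_TRAP = {
    "received_at": "20250828T101200Z",
    "source": "10.0.100.21",
    "contextName": "",
    "varBinds": [
        {"oid": "1.3.6.1.6.3.1.1.4.1.0", "value": "1.3.6.1.6.3.1.1.5.3"},
        {"oid": "1.3.6.1.2.1.2.2.1.1.2", "value": "2"},
        {"oid": "1.3.6.1.2.1.2.2.1.8.2", "value": "2"},
    ],
}

if __name__ == "__main__":
    print(summarize_link_trap(SAMPLE_TRAP))
```

Asserting on the summary (for example, that oper_status is "down" for the interface you shut) gives a stronger check than merely confirming that a file arrived.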

C. show running-config | include snmp (Cisco IOS-XE)

snmp-server community public RO
snmp-server host 10.0.100.10 version 2c public
snmp-server enable traps snmp linkdown

D. Elasticsearch query example (verify ingestion)

$ curl -s "http://localhost:9200/snmp-traps/_search?q=source:10.0.100.21&pretty"

{
  "hits": {
    "hits": [
      { "_source": { "received_at": "20250828T101200Z", "source":"10.0.100.21", "varBinds":[ ... ] } }
    ]
  }
}
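The same check can be scripted so a test can assert on ingestion. The sketch below only builds the search URL and inspects an already-decoded response, so it runs without a cluster; build_search_url() and response_has_trap() are hypothetical helper names, and the response shape is assumed to match the example above.

```python
from urllib.parse import urlencode


def build_search_url(base_url: str, index: str, source: str) -> str:
    """Build a URI-search URL that filters trap documents by source IP."""
    query = urlencode({"q": f"source:{source}"})
    return f"{base_url.rstrip('/')}/{index}/_search?{query}"


def response_has_trap(response: dict, source: str, trap_oid: str) -> bool:
    """Return True if any hit from `source` carries `trap_oid` as a varBind value."""
    for hit in response.get("hits", {}).get("hits", []):
        doc = hit.get("_source", {})
        if doc.get("source") != source:
            continue
        if any(vb.get("value") == trap_oid for vb in doc.get("varBinds", [])):
            return True
    return False


if __name__ == "__main__":
    print(build_search_url("http://localhost:9200", "snmp-traps", "10.0.100.21"))
```

In a test you would fetch the URL (for example with urllib.request.urlopen), decode the JSON body, and pass the resulting dict to response_has_trap().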

E. pyATS output summary (aetest)

TESTCASE snmp_trap_tests.trigger_and_validate_trap
  PASS: Received trap file: results/traps/20250828T101200Z_10_0_100_21.json
TESTCASE snmp_trap_tests.cli_validation
  PASS: R1 has snmp-server host 10.0.100.10
  PASS: A1 has snmp-server configured

Practical Appendices

A — Dependencies & quickstart

On the automation host (virtualenv recommended):

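python3 -m venv venv && source venv/bin/activate
pip install pysnmp pyats genie
# the scripts here use the pysnmp 4.4 API, whose asyncore transport needs Python 3.11 or older;
# if the pysnmp imports fail on a newer release, pin the 4.4 series: pip install "pysnmp<5"
# optional, for GUI/ES integration:
pip install elasticsearch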

Start the trap receiver (dev port 2162):

python3 trap_receiver.py --listen-port 2162 --community public

In a separate shell run the pyATS test (ensure testbed.yml is configured):

python3 snmp_trap_test.py --testbed testbed.yml

B — Scaling & production considerations

- Port privileges: In production use port 162 as root or via a privileged service. For security, bind the collector behind a firewall and limit allowed source IPs.

- High throughput: For large networks, replace per-trap file storage with a queue (RabbitMQ/Kafka) or direct ingestion into Elasticsearch with batching for performance.

- Redundancy: Use load-balanced trap collectors or multiple collectors (devices can be configured with multiple snmp-server host entries). Ensure dedupe logic for multiple arrivals.

- Security: Prefer SNMPv3 in production with auth+priv. Do not use public community strings.
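For the dedupe logic mentioned under Redundancy, one simple approach is to key each trap on its source and full varBind list. This is a sketch under the assumption that the same notification delivered to two collectors carries identical varBinds (including sysUpTime.0), while two separate events differ in at least one of them; trap_key() and dedupe_traps() are hypothetical names.

```python
def trap_key(trap: dict) -> tuple:
    """Identity of a trap: source plus the ordered (oid, value) pairs."""
    var_binds = tuple((vb["oid"], vb["value"]) for vb in trap.get("varBinds", []))
    return (trap.get("source"), var_binds)


def dedupe_traps(traps: list) -> list:
    """Keep the first copy of each trap, preserving arrival order."""
    seen = set()
    unique = []
    for trap in traps:
        key = trap_key(trap)
        if key not in seen:
            seen.add(key)
            unique.append(trap)
    return unique


if __name__ == "__main__":
    first = {"source": "10.0.100.21", "received_at": "20250828T101200Z",
             "varBinds": [{"oid": "1.3.6.1.2.1.1.3.0", "value": "12345"}]}
    copy_via_second_collector = dict(first, received_at="20250828T101201Z")
    print(len(dedupe_traps([first, copy_via_second_collector])))
```

The receive timestamp is deliberately left out of the key, since each collector stamps its own arrival time.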

FAQs

Q1 — Which SNMP version should I test in the lab?

A: Test both SNMPv2c and SNMPv3. SNMPv2c is simpler and common; SNMPv3 is more secure and required for production. Validate auth/priv parameters, engineID behavior, and that traps arrive encrypted (where applicable). The pysnmp library supports v3 — include v3 user config and check for correct context.

Q2 — Traps are not arriving — what’s the checklist?

A: Common causes:

- Device not configured to send traps to your collector IP/port (verify snmp-server host config).

- Community string mismatch (v2c) or SNMPv3 user auth/priv mismatch.

- Firewall/ACL blocking UDP/162 (or your configured port) between device and collector.

- NTP/time drift (makes matching timestamps harder but doesn’t prevent arrival).

- Collector binding error (no permission to use port 162) — try non-privileged port in lab.

Use tcpdump -n -i <mgmt-if> udp port 162 on the automation host to see incoming UDP frames.
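The collector binding item on that checklist can be verified before starting the receiver. The following is a minimal sketch using only the standard library; can_bind_udp() is a hypothetical helper name. It returns False both when the process lacks permission for a privileged port and when another process already holds the port.

```python
import socket


def can_bind_udp(addr: str = "0.0.0.0", port: int = 2162) -> bool:
    """Return True if this process can bind a UDP socket to (addr, port)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        sock.bind((addr, port))
        return True
    except OSError:
        # Covers permission errors (e.g. port 162 as non-root) and "address in use".
        return False
    finally:
        sock.close()


if __name__ == "__main__":
    for port in (162, 2162):
        print(port, "bindable" if can_bind_udp(port=port) else "not bindable")
```

Run it while the receiver is stopped; if the receiver is already listening, a False result for its port is expected.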

Q3 — How do I test device-generated traps (not simulated)?

A: Methods vary by vendor:

- Cisco IOS/XE: you can cause a linkDown event (shutdown an interface) or configure snmp-server enable traps and snmp-server host ... then perform a real event.

- Arista EOS: similar; interface admin down/up will generate traps if enabled.

- Palo Alto / Fortinet: platform dependent; use management UI or CLI to trigger a test trap or temporarily induce a non-destructive event.

If you cannot safely create events, use the injector to exercise monitoring pipeline.

Q4 — How do I validate parsing and MIB names?

A: You can either assert on numeric OIDs (reliable) or load MIBs with pysmi/pysnmp to convert OIDs to names. For production GUIs you’ll want to install vendor MIBs in Logstash/Elasticsearch or the GUI so dashboards show human-readable trap names.

Q5 — SNMP traps are UDP — how to ensure reliability during tests?

A: Because traps are unreliable:

- Design tests to tolerate occasional missed traps (retries).

- For critical events, require multiple traps or corroborate with syslog and device CLI evidence.

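- Use SNMP INFORMs (acknowledged notifications, available in SNMPv2c and SNMPv3) if the device supports them. The receiver acknowledges each INFORM at the application level, so the sender can retransmit when no acknowledgement arrives.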
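The retry idea can be expressed as a small wrapper around whatever triggers the trap and whatever checks for evidence. This sketch is illustrative only: trigger_with_retries() is a hypothetical name, and the two callables stand in for your own trigger (injector or device command) and evidence check (new trap file, syslog line, and so on).

```python
import time


def trigger_with_retries(trigger, evidence_found, attempts=3, wait=2.0, poll=0.1):
    """Call trigger() up to `attempts` times, waiting `wait` seconds after each
    call for evidence_found() to become true.

    Returns the attempt number that succeeded, or 0 if none did.
    """
    for attempt in range(1, attempts + 1):
        trigger()
        deadline = time.monotonic() + wait
        while True:
            if evidence_found():
                return attempt
            if time.monotonic() >= deadline:
                break
            time.sleep(poll)
    return 0


if __name__ == "__main__":
    sent = []
    # Simulate a lossy path: evidence only shows up after the second trap.
    attempt = trigger_with_retries(lambda: sent.append("trap"),
                                   lambda: len(sent) >= 2,
                                   attempts=3, wait=0.2)
    print("succeeded on attempt", attempt)
```

Recording which attempt succeeded is useful in reports: a test that regularly needs the second or third attempt points at packet loss worth investigating.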

Q6 — How do I integrate trap tests into CI/CD?

A: Use the pyATS job as a CI step:

- Before deploying a new monitoring collector config, run the trap tests in a staging environment.

- Run snmp_trap_test.py in the pipeline (inventory/testbed points to staging devices).

- Fail the pipeline if traps are not received or parsed as expected.

Store artifacts (results/traps/*.json and the pyATS logs and reports) as CI artifacts for audit.

Q7 — GUI validation: what to check in Kibana/ELK?

A: In Kibana check:

- Latest traps index: newest documents show source, OID and message.

- Count by source: ensure expected device appears and counts increase after tests.

- Time series: trap frequency over last N minutes — confirm spike during your injection.

- Dashboards: top OIDs, top reasons, per-device top traps.

You can automate a small validation by querying the ES REST API (curl) to assert the trap document exists (example earlier).
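A quick local cross-check for the "count by source" view is to aggregate the receiver's JSON files and compare the numbers with what the dashboard shows. The sketch below assumes the file layout written by the receiver in this article; count_traps_by_source() is a hypothetical helper name.

```python
import json
from collections import Counter
from pathlib import Path


def count_traps_by_source(trap_dir: str) -> Counter:
    """Count stored trap documents per source IP."""
    counts = Counter()
    for path in Path(trap_dir).glob("*.json"):
        doc = json.loads(path.read_text())
        counts[doc.get("source", "unknown")] += 1
    return counts


if __name__ == "__main__":
    for source, count in count_traps_by_source("results/traps").most_common():
        print(source, count)
```

If the local counts and the dashboard disagree, the gap is usually in the ingestion step rather than in trap delivery, which narrows down where to look.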

Q8 — How to handle vendor differences in trap payloads?

A: Standardize in validation:

- Assert on common standard OIDs first (linkDown, authFailure).

- For vendor-specific traps, maintain a small mapping file of expected OIDs per device model and assert accordingly.

- Build vendor parser functions (e.g., parse_arista_trap()) to normalize payload fields into a canonical JSON used by your tests and GUI.
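One way to organise those vendor parsers is a registry keyed on the device OS, with a generic parser as the fallback. The sketch below is only a skeleton: the canonical field names, the PARSERS registry and normalize_trap() are hypothetical, and parse_arista_trap() is a placeholder that does not yet decode any EOS-specific varBinds.

```python
SNMP_TRAP_OID = "1.3.6.1.6.3.1.1.4.1.0"  # snmpTrapOID.0

# Standard trap OIDs that every vendor should agree on.
STANDARD_EVENTS = {
    "1.3.6.1.6.3.1.1.5.3": "link_down",
    "1.3.6.1.6.3.1.1.5.4": "link_up",
    "1.3.6.1.6.3.1.1.5.5": "auth_failure",
}


def parse_generic_trap(trap: dict) -> dict:
    """Map a stored trap to a canonical event using only standard OIDs."""
    trap_oid = next(
        (vb["value"] for vb in trap.get("varBinds", []) if vb["oid"] == SNMP_TRAP_OID),
        None,
    )
    return {
        "device": trap.get("source"),
        "trap_oid": trap_oid,
        "event": STANDARD_EVENTS.get(trap_oid, "unknown"),
    }


def parse_arista_trap(trap: dict) -> dict:
    """Placeholder vendor parser: start from the generic result, then add
    EOS-specific fields here as you learn which varBinds your devices send."""
    event = parse_generic_trap(trap)
    event["vendor"] = "arista"
    return event


# Registry keyed on the testbed 'os' value; unknown platforms use the generic parser.
PARSERS = {"eos": parse_arista_trap}


def normalize_trap(trap: dict, device_os: str) -> dict:
    return PARSERS.get(device_os, parse_generic_trap)(trap)


if __name__ == "__main__":
    trap = {"source": "10.0.100.22",
            "varBinds": [{"oid": SNMP_TRAP_OID, "value": "1.3.6.1.6.3.1.1.5.3"}]}
    print(normalize_trap(trap, "eos"))
```

Keeping the registry keyed on the same os values used in testbed.yml means the test can pick the parser straight from the device object.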


Join Our Training

If you want step-by-step, instructor-led help building production-grade monitoring and validation pipelines like the one in this masterclass, Trainer Sagar Dhawan is running a 3-month instructor-led course covering Python, Ansible, APIs and Cisco DevNet built for network engineers who want to graduate from manual checks to automated, auditable processes. The course includes labs, code reviews, and real projects that mirror this SNMP trap validation pipeline.

Learn more and enroll:

https://course.networkjourney.com/python-ansible-api-cisco-devnet-for-network-engineers/

Take your next step as a Python for Network Engineer — join the program and learn how to automate monitoring validation end-to-end.

Enroll Now & Future‑Proof Your Career

Email: info@networkjourney.com

WhatsApp / Call: +91 97395 21088
