Should You Upgrade to Spine-Leaf Network Design in 2025? [CCNP Enterprise]

If you’ve been working in networking for a while—especially on legacy campus networks—you’ve probably hit that scaling wall. You know the one: too many hops, inconsistent latency, unpredictable East-West traffic behavior, and painful troubleshooting. That’s where Spine-Leaf architecture enters the picture. In this post, I’ll break it all down: what it is, why it matters, where it fits, how to configure it in EVE-NG, and whether 2025 is the year you should upgrade your network.

Let’s demystify this powerful design and decide if it’s right for your network!

Theory in Brief – What Is Spine-Leaf Architecture?

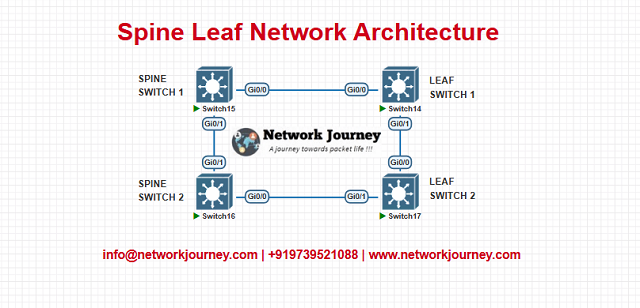

Spine-Leaf is a modern Layer 3 network design primarily used in data centers and high-performance environments. Unlike traditional Three-Tier architectures (Core-Distribution-Access), Spine-Leaf uses just two layers:

Leaf Switches: Connect to endpoints (servers, firewalls, routers).

Spine Switches: Interconnect leaf switches.

Each leaf connects to every spine, creating a full mesh between the two layers. There’s no direct leaf-to-leaf or spine-to-spine connection.
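
The wiring rule above can be sketched in a few lines of Python. This is only an illustration: the function names and the `Spine1`/`Leaf1` labels are hypothetical, and the model ignores link speeds entirely.

```python
from itertools import product


def build_fabric(num_spines, num_leaves):
    """Return spine names, leaf names, and the set of leaf-spine links."""
    spines = [f"Spine{i}" for i in range(1, num_spines + 1)]
    leaves = [f"Leaf{i}" for i in range(1, num_leaves + 1)]
    # Every leaf connects to every spine; no leaf-leaf or spine-spine links.
    links = set(product(leaves, spines))
    return spines, leaves, links


def leaf_to_leaf_paths(src, dst, spines, links):
    """List every path of the form src -> spine -> dst."""
    return [(src, s, dst) for s in spines if (src, s) in links and (dst, s) in links]


spines, leaves, links = build_fabric(num_spines=2, num_leaves=4)
paths = leaf_to_leaf_paths("Leaf1", "Leaf3", spines, links)
print(len(links))  # 8 links: 4 leaves x 2 spines
print(paths)       # one path per spine
```

The number of paths between any two leaves equals the number of spines, which is why adding a spine adds both bandwidth and redundancy.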

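This design provides predictable latency, horizontal scalability, and high bisection bandwidth. The fabric is truly non-blocking only when each leaf's uplink capacity matches its downlink capacity. It pairs well with VXLAN overlays, EVPN control planes, and SDN controllers in data center fabrics.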

Compared to legacy topologies built around North-South (client-to-server) traffic, Spine-Leaf handles East-West (server-to-server) traffic much better, because any two leaves are always the same number of hops apart.

Summary – Comparison & Pros/Cons

| Feature | Spine-Leaf Architecture | Traditional Three-Tier Architecture |
|---|---|---|
| Layers | 2 (Spine + Leaf) | 3 (Core + Distribution + Access) |
| Traffic Flow | East-West optimized | North-South optimized |
| Scalability | High – Add more spines or leaves | Medium – Limited by hierarchical layers |
| Complexity | Moderate (initially) | Simple (familiar design) |
| Cabling | Higher (Full mesh) | Less cabling |
| Convergence Speed | Faster (ECMP + dynamic routing) | Slower due to STP and hop count |
| Protocol Compatibility | VXLAN, EVPN, BGP, OSPF | STP, HSRP, EIGRP |
| Use Case | Data centers, cloud networks | Campus, branch, enterprise edge |
| Fault Tolerance | High | Moderate |
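
To make the scalability and fault-tolerance rows concrete, here is a rough sizing sketch. It assumes one uplink from each leaf to each spine and identical switches in each layer; the port counts in the example are illustrative, not taken from any specific product.

```python
def fabric_limits(spine_ports, leaf_uplinks, leaf_downlinks):
    """Size a two-tier fabric where each leaf has one uplink to every spine."""
    num_spines = leaf_uplinks   # one uplink per spine
    max_leaves = spine_ports    # each leaf consumes one port on every spine
    return {
        "spines": num_spines,
        "max_leaves": max_leaves,
        "fabric_links": num_spines * max_leaves,
        "server_ports": max_leaves * leaf_downlinks,
        # Fraction of leaf uplink capacity left if a single spine fails.
        "capacity_after_one_spine_failure": (num_spines - 1) / num_spines,
    }


limits = fabric_limits(spine_ports=32, leaf_uplinks=4, leaf_downlinks=48)
print(limits)
```

With these example numbers the fabric tops out at 32 leaves and 1,536 server-facing ports, and losing one of four spines leaves 75% of the uplink capacity in service.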

Essential CLI Commands (Cisco NX-OS / IOS-XE)

| Task | CLI Command | Description |
|---|---|---|
| View interfaces | show ip interface brief | Check uplinks/downlinks |
| Check routing protocol status | show ip ospf neighbor or show ip bgp summary | Check underlay adjacencies |
| Verify ECMP paths | show ip route <prefix> | View multiple next hops for a prefix |
| Configure OSPF for underlay (IOS-XE) | router ospf 10, then network 10.0.0.0 0.0.0.255 area 0 | Enables OSPF on interfaces in the matching range |
| Configure OSPF for underlay (NX-OS) | feature ospf, router ospf 10, then ip router ospf 10 area 0 under each interface | NX-OS enables OSPF per interface rather than with network statements |
| Enable PIM for multicast spine-leaf | ip pim sparse-mode | Interface-level command for a multicast fabric |
| Troubleshoot reachability | ping / traceroute | Basic IP connectivity check |
| VXLAN verification | show nve peers, show nve interface | VTEP peer and NVE interface validation |
| Interface role check | show cdp neighbors | Check connected switches |

Real-World Use Case

| Organization Type | Challenge | Spine-Leaf Solution |
|---|---|---|
| Cloud Hosting Provider | High latency in East-West traffic | Uniform low-latency with leaf-spine design |
| Financial Data Center | Redundant paths causing STP bottlenecks | Layer 3 ECMP replaces STP limitations |
| E-Commerce Giant | Fast scaling needs for peak traffic | Add more leaves/spines without disruption |
| Enterprise with VMware | Complex VM migrations across racks | VXLAN over spine-leaf for L2/L3 mobility |

EVE-NG Lab – Spine-Leaf Simulation

Lab Objective

Simulate a basic 2-spine and 2-leaf setup with OSPF underlay and verify ECMP routing.

Topology

Each leaf connects to both spines. Loopback interfaces on the leaf switches stand in for servers.

Sample CLI Config – Spine1

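    ! IOS-style OSPF syntax. On NX-OS, enable "feature ospf", add "no switchport" to each
    ! routed port, and use "ip router ospf 10 area 0" per interface instead of network statements.
    hostname Spine1
    interface Ethernet1/1
     ip address 10.1.1.1 255.255.255.0
     no shutdown
    interface Ethernet1/2
     ip address 10.1.2.1 255.255.255.0
     no shutdown
    router ospf 10
     network 10.1.1.0 0.0.0.255 area 0
     network 10.1.2.0 0.0.0.255 area 0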

Sample CLI Config – Leaf1

    ! Same IOS-style syntax as Spine1; Loopback0 stands in for an attached server.
    hostname Leaf1
    interface Ethernet1/1
     ip address 10.1.1.2 255.255.255.0
     no shutdown
    interface Ethernet1/2
     ip address 10.1.3.1 255.255.255.0
     no shutdown
    interface Loopback0
     ip address 1.1.1.1 255.255.255.255
    router ospf 10
     network 10.1.1.0 0.0.0.255 area 0
     network 10.1.3.0 0.0.0.255 area 0
     network 1.1.1.1 0.0.0.0 area 0

Repeat similar configs for Spine2 and Leaf2 with their own subnets and loopbacks.
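
If you would rather generate the remaining addressing than work it out by hand, a small script can lay out one /24 per leaf-spine link. The sketch below follows the subnet numbering used for Spine1 and Leaf1 (10.1.1.0/24, 10.1.2.0/24, 10.1.3.0/24). Everything beyond that is an assumption for illustration: the 10.1.4.0/24 subnet for the Spine2 to Leaf2 link, the `n.n.n.n` loopback pattern for Leaf2, and giving the spine `.1` and the leaf `.2` on every link (the Leaf1 sample uses `.1` on its second uplink, so adjust to match your lab).

```python
import ipaddress


def link_plan(num_spines, num_leaves, base="10.1.0.0/16"):
    """Assign one /24 per leaf-spine link, numbered spine by spine."""
    subnets = list(ipaddress.ip_network(base).subnets(new_prefix=24))
    plan = []
    for s in range(1, num_spines + 1):
        for l in range(1, num_leaves + 1):
            index = (s - 1) * num_leaves + l  # skips 10.1.0.0/24
            net = subnets[index]
            plan.append({
                "spine": f"Spine{s}",
                "leaf": f"Leaf{l}",
                "subnet": str(net),
                "spine_ip": str(net[1]),  # assumed: spine takes .1
                "leaf_ip": str(net[2]),   # assumed: leaf takes .2
            })
    return plan


def leaf_loopback(leaf_number):
    """Assumed pattern: Leaf1 -> 1.1.1.1, Leaf2 -> 2.2.2.2."""
    return ".".join([str(leaf_number)] * 4)


plan = link_plan(num_spines=2, num_leaves=2)
for entry in plan:
    print(entry)
print(leaf_loopback(2))
```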

Lab Validation

    Leaf2# show ip route 1.1.1.1
    ! Should show ECMP via both Spine1 and Spine2

Two next hops for 1.1.1.1/32, one through each spine, confirm that both paths are installed and that traffic can be load-shared across them.

Troubleshooting Tips

| Symptom | Likely Cause | Command or Fix |
|---|---|---|
| Traffic not reaching leaf device | Underlay routing broken | show ip ospf neighbor, ping, traceroute |
| Unequal load balancing | Paths through the spines have different total OSPF costs | Check interface OSPF cost |
| Loopbacks unreachable | Missing network statement | Verify router ospf config |
| Only one ECMP path seen | Spine not peering or down | Check interfaces and routing |
| VXLAN not forming | NVE or VTEP config issue | show nve peers, show nve interface |
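
The "unequal load balancing" and "only one ECMP path" rows often come down to OSPF cost arithmetic. The sketch below is a simplified model: cost is the reference bandwidth divided by the interface bandwidth, with a floor of 1, and only the lowest-cost paths are installed. The function names and link speeds are hypothetical, and the loopback's own cost is ignored because it is the same on every path.

```python
def ospf_cost(interface_mbps, reference_mbps):
    """OSPF interface cost: reference bandwidth / interface bandwidth, minimum 1."""
    return max(1, reference_mbps // interface_mbps)


def ecmp_next_hops(paths, reference_mbps):
    """paths maps a next hop to the speeds (Mbps) of the outgoing links along it."""
    totals = {
        hop: sum(ospf_cost(speed, reference_mbps) for speed in speeds)
        for hop, speeds in paths.items()
    }
    best = min(totals.values())
    return sorted(hop for hop, total in totals.items() if total == best)


# Leaf2 -> Leaf1: the Spine2 path includes a link that negotiated down to 1G.
paths = {"Spine1": [10_000, 10_000], "Spine2": [10_000, 1_000]}

print(ecmp_next_hops(paths, reference_mbps=100))      # ['Spine1', 'Spine2']
print(ecmp_next_hops(paths, reference_mbps=100_000))  # ['Spine1']
```

With a 100 Mbps reference bandwidth, every link of 100 Mbps or faster costs 1, so both paths look equal even though one is slower. Raising the reference bandwidth exposes the difference, and the slower path drops out of the routing table.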

Frequently Asked Questions (FAQ)

1. What is Spine-Leaf Architecture and how is it different from traditional networks?

Answer:

Spine-Leaf is a modern Layer 3-centric network design where every Leaf switch connects to every Spine switch, forming a non-blocking, fully meshed fabric.

- Unlike Three-Tier (Core, Distribution, Access), Spine-Leaf reduces bottlenecks by spreading traffic across all spines with ECMP.

- It’s widely used in data centers, especially those requiring high throughput and low latency for east-west traffic.

2. What are the primary benefits of adopting Spine-Leaf in 2025?

Answer:

- Scalability: Easily add new Leaf switches without redesigning the network.

- High Performance: Uniform latency and bandwidth across the fabric.

- Redundancy: Multiple paths reduce single points of failure.

- Simplified Automation: Works well with SDN controllers like Cisco ACI.

- Predictable Behavior: Deterministic performance due to consistent pathing.

3. Is Spine-Leaf only suitable for data centers?

Answer:

Primarily, yes—but it’s expanding into campus and edge networks too.

Organizations with distributed workloads, multi-tier applications, or microservices benefit the most.

It’s not ideal for small offices or for environments with few devices or a limited budget.

4. How does traffic flow in a Spine-Leaf network?

Answer:

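- North-South traffic (client-to-server) enters at the leaf that connects to the outside network, crosses a spine, and exits at the leaf where the server is attached: Leaf → Spine → Leaf.

- East-West traffic (server-to-server) between different leaves follows the same Leaf → Spine → Leaf pattern, so every pair of leaves is the same number of hops apart. Servers on the same leaf are switched locally.

There’s no direct Leaf-to-Leaf or Spine-to-Spine connection, so all inter-leaf paths are the same length. That is what lets equal-cost multipath (ECMP) spread flows across every spine.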
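
As a rough illustration of how ECMP spreads flows, the sketch below hashes a flow's 5-tuple and maps it onto one of the equal-cost next hops. It is a conceptual model only: real switches use their own hardware hash functions and configurable hash fields, and the CRC32 hash, addresses, and names here are assumptions for the example.

```python
import zlib
from collections import Counter


def pick_next_hop(flow, next_hops):
    """Hash the flow 5-tuple and map it onto one equal-cost next hop."""
    key = "|".join(str(field) for field in flow).encode()
    return next_hops[zlib.crc32(key) % len(next_hops)]


next_hops = ["Spine1", "Spine2"]

# 1000 TCP flows (protocol 6) that differ only in source port.
flows = [("10.0.1.10", "10.0.2.20", 6, 40000 + i, 443) for i in range(1000)]
usage = Counter(pick_next_hop(flow, next_hops) for flow in flows)
print(usage)
```

Because the hash is computed per flow, all packets of one flow take the same spine and arrive in order, while different flows are distributed across the spines.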

5. Does Spine-Leaf require Layer 3 everywhere?

Answer:

Yes, in most modern implementations, routing is done between Leaf and Spine using OSPF, IS-IS, or BGP.

This prevents Layer 2 loops, speeds up convergence, and removes the need for STP on the leaf-to-spine links of a fully routed fabric.

6. What are the hardware requirements for Spine-Leaf?

Answer:

- Leaf switches: Top-of-rack (ToR) switches with uplinks to every Spine.

- Spine switches: High-throughput switches that connect to every Leaf.

You’ll need higher port density, 10/40/100G uplinks, and sometimes VXLAN/EVPN capable gear if virtual overlay is used.
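
When choosing uplink speeds, a quick oversubscription check helps. The ratio is a leaf's total server-facing bandwidth divided by its total uplink bandwidth; 1.0 means non-blocking. The port counts and speeds below are illustrative assumptions, not a recommendation.

```python
def oversubscription_ratio(downlinks, downlink_gbps, uplinks, uplink_gbps):
    """Leaf downlink bandwidth divided by leaf uplink bandwidth."""
    downstream = downlinks * downlink_gbps
    upstream = uplinks * uplink_gbps
    return downstream / upstream


# 48 x 10G server ports with 4 x 40G uplinks
print(oversubscription_ratio(48, 10, 4, 40))   # 3.0, i.e. 3:1
# 48 x 25G server ports with 6 x 100G uplinks
print(oversubscription_ratio(48, 25, 6, 100))  # 2.0, i.e. 2:1
```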

7. How does Spine-Leaf work with virtualization and overlays like VXLAN?

Answer:

Spine-Leaf integrates seamlessly with VXLAN overlays, separating underlay and overlay traffic.

- Leaf switches act as VXLAN Tunnel Endpoints (VTEPs).

- The design supports network segmentation and multi-tenancy.

Cisco ACI or NSX-T commonly use this model to deploy dynamic overlays over the physical fabric.
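
To see why VXLAN supports so many more segments than VLANs, it helps to look at the 8-byte VXLAN header a VTEP adds, as defined in RFC 7348: an 8-bit flags field, 24 reserved bits, a 24-bit VNI, and 8 more reserved bits. The sketch below only builds and parses that header; the helper names and the VNI value are hypothetical, and it does not send any traffic.

```python
import struct

VNI_VALID_FLAG = 0x08  # "I" flag: the VNI field is valid


def vxlan_header(vni):
    """Build the 8-byte VXLAN header for a given 24-bit VNI."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) + reserved (24 bits)
    # Word 2: VNI (24 bits) + reserved (8 bits)
    return struct.pack("!II", VNI_VALID_FLAG << 24, vni << 8)


def parse_vni(header):
    """Extract the VNI from an 8-byte VXLAN header."""
    _, second_word = struct.unpack("!II", header)
    return second_word >> 8


header = vxlan_header(10010)
print(header.hex())       # 0800000000271a00
print(parse_vni(header))  # 10010
print(2**24)              # 16777216 possible VNIs
```

A 24-bit VNI gives roughly 16 million segments, compared with the 4,094 usable IDs of a 12-bit VLAN tag, which is what makes large multi-tenant fabrics practical.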

8. Is Spine-Leaf cost-effective for mid-sized networks?

Answer:

Initially, no—costs are higher due to increased hardware and routing complexity.

However, in the long run, it offers:

- Reduced downtime

- Simplified growth

- Better support for hybrid cloud & SDN

If your network is growing fast, the ROI can justify the expense.

9. What are common protocols used in a Spine-Leaf topology?

Answer:

- Underlay: OSPF, IS-IS, BGP

- Overlay: VXLAN (data plane), EVPN (control plane)

- Policy & Control: MP-BGP (carries the EVPN routes), LISP (in some designs)

These protocols help in routing, segmentation, tenant isolation, and policy enforcement.

10. Should I consider migrating from Three-Tier to Spine-Leaf in 2025?

Answer:

If your network faces:

- Frequent traffic bottlenecks

- Scalability limitations

- Manual provisioning challenges

Then yes, Spine-Leaf is a future-proof solution.

Especially with hybrid cloud, containers, and Zero Trust security becoming essential, Spine-Leaf offers the agility and automation support modern enterprises need.

Final Note

Understanding when and how to implement Spine-Leaf network design is valuable for anyone pursuing CCNP Enterprise (ENCOR) certification or working in enterprise network roles. Use this guide in your practice labs, real-world projects, and interviews to show a solid grasp of architectural planning and CLI-level configuration skills.

If you found this article helpful and want to take your skills to the next level, I invite you to join my Instructor-Led Weekend Batch for:

CCNP Enterprise to CCIE Enterprise – Covering ENCOR, ENARSI, SD-WAN, and more!

Get hands-on labs, real-world projects, and industry-grade training that strengthens your Routing & Switching foundations while preparing you for advanced certifications and job roles.

Email: info@networkjourney.com

WhatsApp / Call: +91 97395 21088

Upskill now and future-proof your networking career!

![Should You Upgrade to Spine-Leaf Network Design in 2025? [CCNP Enterprise]](https://networkjourney.com/wp-content/uploads/2025/06/Should-You-Upgrade-to-Spine-Leaf-Network-Design_networkjourney.png)
