Are Hypervisors Replacing Bare Metal in 2025? Here’s What You Should Know [CCNP ENTERPRISE]

Today we’re diving into a topic I often discuss with students, clients, and fellow engineers — should you deploy your network on a hypervisor or go bare metal?

When I started building labs and handling client deployments, I thought bare metal was always the “pro” way. But the reality is — it depends. Each model has its own pros, trade-offs, and best-fit scenarios. So in this post, I’ll break down Hypervisor-based vs Bare Metal deployments, show real-world applications, demonstrate a small lab on EVE-NG, and give you enough info to decide what suits your use case best.

Whether you’re into virtualization, data centers, or simply want to prep for interviews or certifications — let’s get started!

Theory in Brief: Understanding the Basics

What Is a Hypervisor-Based Deployment?

A hypervisor-based network deployment runs virtualized network functions (routers, switches, firewalls) as Virtual Machines (VMs) on top of a hypervisor — such as VMware ESXi, KVM, or Hyper-V. These VMs emulate hardware, allowing you to deploy multiple network devices on a single physical server.

Popular for:

- Labs and simulation environments

- Cloud-native and software-defined networks

- Dynamic resource scaling

What Is Bare Metal Deployment?

Bare metal deployment means installing your network OS (like Cisco NX-OS, Junos OS, or FortiOS) directly on the hardware. There is no virtualization layer, just the hardware and the software running on it.

Ideal for:

- High-performance environments

- Dedicated appliances

- Carrier-grade services

Key Difference

At its core, the decision is between flexibility (hypervisor) and raw performance (bare metal). Virtualization makes it easier to scale and automate, while bare metal avoids the virtualization layer and shared-host contention, which gives more predictable throughput and latency.
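One way to make "predictable latency" measurable is to compare the jitter that ping reports for each deployment. The sketch below is a minimal example that assumes the summary line printed by Linux iputils `ping` (other platforms format it differently). The two sample lines are placeholders for illustration, not measurements, and the function names are my own.

```python
import re

# Summary line format printed by Linux iputils ping (an assumption of this sketch).
_RTT_RE = re.compile(
    r"rtt min/avg/max/mdev = ([\d.]+)/([\d.]+)/([\d.]+)/([\d.]+) ms"
)


def parse_ping_summary(output):
    """Extract min/avg/max/mdev (in milliseconds) from ping output."""
    match = _RTT_RE.search(output)
    if match is None:
        raise ValueError("no rtt summary line found")
    keys = ("min", "avg", "max", "mdev")
    return dict(zip(keys, map(float, match.groups())))


def more_consistent(run_a, run_b):
    """Return 'a' or 'b' for the run with the lower mdev (less jitter)."""
    return "a" if run_a["mdev"] <= run_b["mdev"] else "b"


# Placeholder lines for illustration only; these are NOT real measurements.
SAMPLE_A = "rtt min/avg/max/mdev = 0.410/0.650/1.900/0.320 ms"
SAMPLE_B = "rtt min/avg/max/mdev = 0.380/0.420/0.510/0.030 ms"

if __name__ == "__main__":
    a = parse_ping_summary(SAMPLE_A)
    b = parse_ping_summary(SAMPLE_B)
    print(a["mdev"], b["mdev"], more_consistent(a, b))
```

Feed it the real output of `ping -c 100 <IP>` from each environment. Average RTT tells you about raw speed, while `mdev` is the number to watch for consistency.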

Think of It Like This

Imagine running your favorite game on a gaming console (bare metal) vs emulating it on your PC using software (hypervisor). The game runs either way, but performance and flexibility vary.

Hypervisor vs Bare Metal

| Feature | Hypervisor-Based Deployment | Bare Metal Deployment |
|---|---|---|
| Deployment Layer | On top of hypervisor (KVM/ESXi) | Directly on hardware |
| Performance | Slightly lower due to virtualization | Maximum hardware performance |
| Flexibility | High – Easily deploy/clone/backup VMs | Low – Manual installation/config |
| Scalability | Horizontal scaling possible | Limited to physical box |
| Use Cases | Labs, cloud, multi-tenancy, NFV | Production, ISP backbone, appliances |
| Downtime Handling | Easy snapshot/restore | Manual recovery |
| Licensing/Cost | Often cheaper (open-source options) | Higher hardware and support cost |
| Automation/DevOps Friendly | Highly compatible | Challenging |

Pros and Cons

| Type | Pros | Cons |
|---|---|---|
| Hypervisor | Easy to deploy and scale; ideal for multi-tenant use; snapshots and backups supported; hardware resource sharing | Lower raw performance; dependent on the hypervisor host; nested virtualization adds complexity |
| Bare Metal | Dedicated performance; more stable under load; vendor-level support | Costly per appliance; no fast recovery; difficult to automate at scale |

CLI Commands Common to Hypervisor and Bare Metal

| Task | CLI Command (Cisco/VMware/Linux) | Notes |
|---|---|---|
| Check network interfaces | show ip interface brief (Cisco) / ip addr (Linux) | Verifies interface IPs and state |
| View routing table | show ip route (Cisco) / netstat -rn (Linux) | Validates connectivity |
| Check host NIC status | esxcli network nic list (VMware) | Lists the ESXi host's physical NICs and their link state |
| Monitor CPU/Memory | top (Linux) / esxtop (VMware) | Resource utilization tracking |
| Test latency | ping <IP> / traceroute <IP> | Basic network tests |
| Show running config | show running-config (Cisco) / cat /etc/network/interfaces (Debian-based Linux) | Used in both models |
| Enable/Disable NIC | ifconfig eth0 up/down or ip link set eth0 up/down (Linux) | Network troubleshooting |
| View VM status (Hypervisor) | virsh list --all (KVM) / esxcli vm process list (VMware) | For KVM and VMware |
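Since these commands behave the same on a virtual router and a physical one, their output is easy to check from a script. Here is a minimal sketch that parses `show ip interface brief` text into rows and flags anything that is not up/up. The sample output is illustrative only, and the function names are hypothetical helpers, not part of any Cisco tool.

```python
def parse_ip_interface_brief(output):
    """Turn 'show ip interface brief' text into a list of dicts."""
    rows = []
    for line in output.splitlines():
        parts = line.split()
        # Skip blank lines and the header row.
        if len(parts) < 6 or parts[0] == "Interface":
            continue
        rows.append({
            "interface": parts[0],
            "ip": parts[1],
            # Status can be two words ("administratively down"),
            # so take everything between Method and Protocol.
            "status": " ".join(parts[4:-1]),
            "protocol": parts[-1],
        })
    return rows


def interfaces_not_up(rows):
    """Names of interfaces whose status/protocol is anything but up/up."""
    return [r["interface"] for r in rows
            if (r["status"], r["protocol"]) != ("up", "up")]


# Illustrative output only; not captured from a real device.
SAMPLE_OUTPUT = """\
Interface              IP-Address      OK? Method Status                Protocol
GigabitEthernet1       192.168.1.1     YES manual up                    up
GigabitEthernet2       unassigned      YES unset  administratively down down
"""

if __name__ == "__main__":
    parsed = parse_ip_interface_brief(SAMPLE_OUTPUT)
    print(interfaces_not_up(parsed))
```

In practice you would collect the text over SSH with whatever tooling you already use, then pass it to the parser.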

Real-World Use Cases

| Environment/Scenario | Hypervisor-Based | Bare Metal |
|---|---|---|
| Network Lab (CCNA/CCNP/CCIE) | EVE-NG, GNS3, VirtualBox | Not practical |
| Enterprise Core Routers | Only in SD-WAN/SDN models | Ideal for full-scale production |
| Cloud Firewalls | vFWs in AWS/Azure/ESXi | Rare, unless on dedicated hosting |
| ISP Edge Deployment | Hybrid possible with NFV | Preferred for throughput |
| Disaster Recovery | VM snapshot and restore | Manual failover |
| Low-Latency Trading Networks | Not recommended | Bare metal ensures lowest latency |

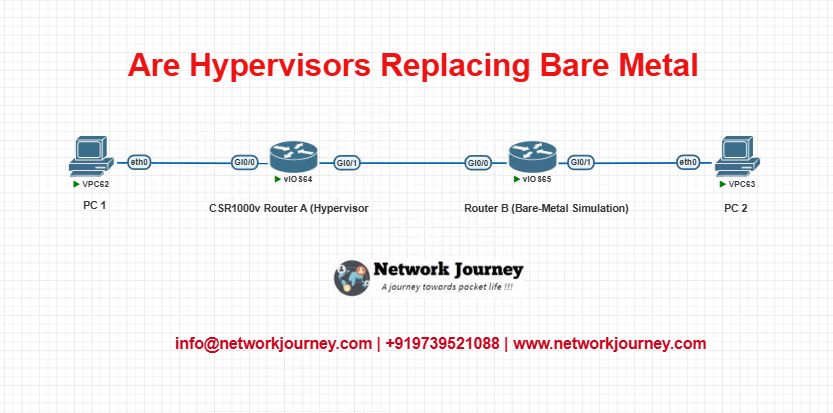

EVE-NG Lab Setup: Hypervisor vs Bare Metal Simulation

We’ll simulate both deployment types in a small lab. True bare metal can’t be done inside EVE-NG, since every node there is virtualized, but we can approximate it by giving one router a dedicated CPU and a fixed, limited set of NICs.

Lab Topology

- Router A: CSR1000v on EVE-NG (acts as a hypervisor-based VNF)

- Router B: VyOS with limited NICs and dedicated CPU (emulates bare metal)

- Test routing and ping between PC1 (behind Router A, in 192.168.1.0/24) and PC2 (behind Router B, in 192.168.2.0/24)

Sample Configuration

On Router A (CSR1000v):

interface GigabitEthernet1

ip address 192.168.1.1 255.255.255.0

no shutdown

interface GigabitEthernet2

ip address 10.1.1.1 255.255.255.0

no shutdown

ip route 192.168.2.0 255.255.255.0 10.1.1.2

On Router B (VyOS):

set interfaces ethernet eth0 address 10.1.1.2/24

set interfaces ethernet eth1 address 192.168.2.1/24

set protocols static route 192.168.1.0/24 next-hop 10.1.1.1

commit; save
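Before powering on the nodes, it helps to sanity-check the addressing. IOS takes dotted masks and VyOS takes prefix lengths, so mismatches are easy to miss by eye. The sketch below uses Python's standard `ipaddress` module with the addresses from the two configs above. It confirms that both routers share the 10.1.1.0/24 link and that each static route's next hop sits on a connected subnet. The function names are my own.

```python
import ipaddress

# Addresses copied from the lab configs (IOS mask form, VyOS prefix form).
ROUTER_A = ["192.168.1.1/255.255.255.0", "10.1.1.1/255.255.255.0"]
ROUTER_B = ["10.1.1.2/24", "192.168.2.1/24"]


def connected_networks(addresses):
    """Return the set of directly connected networks for a router."""
    return {ipaddress.ip_interface(a).network for a in addresses}


def next_hop_is_connected(addresses, next_hop):
    """A static route only resolves if its next hop is on a connected subnet."""
    hop = ipaddress.ip_address(next_hop)
    return any(hop in net for net in connected_networks(addresses))


def shared_links(addresses_a, addresses_b):
    """Networks that both routers are attached to."""
    return connected_networks(addresses_a) & connected_networks(addresses_b)


if __name__ == "__main__":
    print(shared_links(ROUTER_A, ROUTER_B))
    print(next_hop_is_connected(ROUTER_A, "10.1.1.2"))
    print(next_hop_is_connected(ROUTER_B, "10.1.1.1"))
```

If `shared_links` comes back empty or a next-hop check fails, fix the addressing before you start debugging the routers themselves.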

On PC1 and PC2:

- Set each PC’s default gateway to its local router (192.168.1.1 for PC1, 192.168.2.1 for PC2)

- Ping from PC1 to PC2 and back to verify the path through both the hypervisor-based router and the “bare-metal” router
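If the ping fails, it helps to know what the path should look like on paper. The following is a rough model of the lab's two routing tables with a longest-prefix-match lookup that traces a destination hop by hop. The PC host addresses (.10) are assumptions of mine, since the lab only defines the subnets, and the table structure is a simplification rather than how either router stores routes.

```python
import ipaddress

# Routing tables modeled from the lab: (prefix, next router or None if connected).
LAB_TABLES = {
    "RouterA": [("192.168.1.0/24", None), ("10.1.1.0/24", None),
                ("192.168.2.0/24", "RouterB")],
    "RouterB": [("10.1.1.0/24", None), ("192.168.2.0/24", None),
                ("192.168.1.0/24", "RouterA")],
}


def lookup(table, dst):
    """Longest-prefix match. Returns (network, next_router) or None."""
    addr = ipaddress.ip_address(dst)
    best = None
    for prefix, next_router in table:
        net = ipaddress.ip_network(prefix)
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, next_router)
    return best


def trace(tables, first_router, dst, max_hops=8):
    """Follow routes from first_router toward dst. Returns (path, delivered)."""
    path, router = [], first_router
    for _ in range(max_hops):
        path.append(router)
        hit = lookup(tables[router], dst)
        if hit is None:
            return path, False   # no route: packet dropped here
        if hit[1] is None:
            return path, True    # destination is on a connected subnet
        router = hit[1]
    return path, False           # hop limit reached (routing loop)


if __name__ == "__main__":
    # Hypothetical host addresses for PC2 and PC1.
    print(trace(LAB_TABLES, "RouterA", "192.168.2.10"))
    print(trace(LAB_TABLES, "RouterB", "192.168.1.10"))
```

Both directions need a route for ping to succeed. Delete either static route from the model and the trace stops at the router that is missing it.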

Troubleshooting Tips

| Issue | Likely Cause | Suggested Fix |
|---|---|---|
| High latency in virtual router | Resource overcommit on hypervisor | Pin the VM's vCPUs to dedicated physical cores or reduce host load |
| Interface down in bare-metal | Driver or cable issue | Verify NIC status and cabling |
| VM not connecting to network | vNIC not mapped to correct bridge/VLAN | Check port group and VLAN mapping with esxcli network vswitch standard portgroup list |
| Routing not working between nodes | Static route missing | Check route table and config |
| CPU spikes on hypervisor | Multiple VNFs competing for resources | Allocate more CPU/RAM or migrate VMs |
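Overcommit shows up in the first and last rows of this table, and it is simple arithmetic: total vCPUs allocated divided by the physical cores available. Here is a small sketch. The VM names and sizes are hypothetical, and the default limit of 1.0 (no oversubscription) is my assumption for latency-sensitive routers, not a vendor recommendation.

```python
def vcpu_overcommit_ratio(vcpus_per_vm, physical_cores):
    """Total allocated vCPUs divided by physical cores on the host."""
    if physical_cores <= 0:
        raise ValueError("physical_cores must be positive")
    return sum(vcpus_per_vm.values()) / physical_cores


def is_overcommitted(vcpus_per_vm, physical_cores, limit=1.0):
    """True when the ratio exceeds the chosen limit (1.0 is an assumed default)."""
    return vcpu_overcommit_ratio(vcpus_per_vm, physical_cores) > limit


# Hypothetical lab inventory: VM name -> vCPUs assigned.
LAB_VMS = {"router-a": 2, "router-b": 2, "firewall": 4}

if __name__ == "__main__":
    print(vcpu_overcommit_ratio(LAB_VMS, physical_cores=4))
    print(is_overcommitted(LAB_VMS, physical_cores=4))
```

A ratio above 1.0 is normal in labs. It becomes a problem when the nodes are busy at the same time, which is when CPU pinning or moving a VM to another host pays off.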

Frequently Asked Questions (FAQs)

1. Which is better: hypervisor or bare metal for networking?

Answer:

It depends entirely on the use case. Hypervisors (like VMware ESXi, KVM, or Hyper-V) are excellent for labs, training, testing, and scalable environments where flexibility and rapid provisioning are priorities. They allow multiple virtual network devices to run on a single physical host, reducing hardware costs and increasing agility. On the other hand, bare-metal deployments provide direct access to hardware, resulting in lower latency, higher throughput, and more consistent performance, making them ideal for production-grade environments, particularly those requiring real-time processing or high I/O workloads.

2. Can I run Cisco IOS on a hypervisor?

Answer:

Yes, Cisco offers several virtualized router images designed specifically to run on hypervisors. Examples include Cisco IOSv, CSR1000v, and IOS-XRv, all of which can be deployed on virtualization platforms like EVE-NG, GNS3, VMware ESXi, or even VirtualBox. These virtual devices support most of the routing and switching features needed for learning and lab environments and are widely used for CCNA, CCNP, and CCIE preparations, as well as proof-of-concept (PoC) testing.

3. Is EVE-NG considered bare metal?

Answer:

Not in the sense used in this post. EVE-NG is a Linux-based network emulation platform that runs lab nodes as virtual machines or emulated devices. It can be installed directly on a physical server or as a VM on a hypervisor such as VMware ESXi, Workstation, or KVM. Either way, the routers and switches inside a lab are virtualized, and when EVE-NG itself runs as a VM they run nested. You can bring performance closer to bare-metal behavior by dedicating CPU cores, reserving RAM, and limiting resource contention among nodes, but it is not a direct substitute for a true bare-metal deployment.

4. Why choose bare metal in a cloud-first world?

Answer:

Despite the popularity of cloud and virtualized environments, bare-metal servers still offer unmatched performance in certain scenarios. Applications that require ultra-low latency, hardware-level encryption (like Intel AES-NI), or real-time processing (such as high-frequency trading, deep packet inspection, or certain security functions) benefit from the predictable performance and direct hardware access that bare metal provides. It also avoids the “noisy neighbor” issue common in shared virtual environments.

5. Can I migrate from bare metal to hypervisor?

Answer:

Yes, for server workloads. Many infrastructure vendors and hypervisor platforms support P2V (Physical to Virtual) migration, which converts a physical machine into a virtual machine with its configuration and data intact. Tools like VMware Converter, Microsoft Virtual Machine Converter, or third-party backup and recovery solutions can perform these migrations. Purpose-built network appliances are usually a different case: instead of converting the box, you deploy the vendor’s virtual image and migrate the configuration to it. Either route helps organizations move legacy systems into more manageable and flexible virtual environments.

6. Is licensing different between the two?

Answer:

Yes, licensing models vary. In bare-metal setups, you typically license based on hardware units, such as CPU sockets or cores. In hypervisor-based environments, the licensing can be more flexible — often allowing pay-per-use, instance-based, or subscription-based models. However, keep in mind that running software in a virtual environment may require separate virtual appliance licenses, support contracts, or feature unlocks depending on the vendor.

7. What is nested virtualization?

Answer:

Nested virtualization is the practice of running a hypervisor inside a virtual machine, which itself is running on another hypervisor. It is especially useful for training labs, testing new hypervisor features, or simulating multi-layered cloud environments. While powerful for simulation purposes, it is generally not recommended for production due to performance overhead and potential instability. It needs a CPU with hardware virtualization extensions (Intel VT-x or AMD-V) and an outer hypervisor that exposes those extensions to the guest.
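On a Linux KVM host you can check both requirements from a script. This minimal sketch assumes an x86 Linux system: it looks for the `vmx` (Intel VT-x) or `svm` (AMD-V) flag in `/proc/cpuinfo` text, and reads the `nested` parameter of the `kvm_intel` or `kvm_amd` module under `/sys`. The function names and the sample text are mine, and other hypervisors expose this setting differently.

```python
from pathlib import Path


def cpu_virt_extension(cpuinfo_text):
    """Return 'vmx' (Intel VT-x), 'svm' (AMD-V), or None if neither flag is present."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            flags = line.split(":", 1)[1].split()
            if "vmx" in flags:
                return "vmx"
            if "svm" in flags:
                return "svm"
    return None


def kvm_nested_enabled(module, sys_root="/sys"):
    """Read /sys/module/<module>/parameters/nested.

    module is 'kvm_intel' or 'kvm_amd'. Returns True/False, or None when
    the file is missing (module not loaded, or not a KVM host).
    """
    param = Path(sys_root) / "module" / module / "parameters" / "nested"
    try:
        return param.read_text().strip() in ("Y", "1")
    except OSError:
        return None


# Illustrative /proc/cpuinfo fragment; real files list many more flags.
SAMPLE_CPUINFO = "processor\t: 0\nflags\t\t: fpu vme de pse vmx aes\n"

if __name__ == "__main__":
    print(cpu_virt_extension(SAMPLE_CPUINFO))
    print(kvm_nested_enabled("kvm_intel"))
```

Inside an EVE-NG VM, a missing `vmx`/`svm` flag usually means the outer hypervisor is not passing hardware virtualization through to the guest.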

8. Which one is better for CCIE practice?

Answer:

A hypervisor-based environment is better suited for CCIE or any Cisco certification practice. Platforms like EVE-NG or GNS3 allow you to run multiple Cisco routers and switches virtually using images like IOSv, NX-OSv, or CSR1000v on a single machine. This setup saves hardware cost, offers flexibility, and allows for building complex topologies that are required in CCIE labs. Bare metal would require multiple physical devices, which is costly and less flexible for learning purposes.

9. What about failover and redundancy?

Answer:

Hypervisor platforms typically offer availability features such as live migration and automatic restart on another host, alongside snapshots and backup/restore for recovery. For example, VMware vMotion moves a running VM between hosts with minimal downtime, while VMware HA restarts VMs on a surviving host after a host failure. On bare-metal systems, redundancy has to be designed in explicitly using techniques like clustering, first-hop redundancy protocols such as HSRP or VRRP, RAID, or external failover mechanisms, which can be more complex and less flexible.

10. Is it possible to mix both models?

Answer:

Absolutely. Most modern enterprise networks are hybrid, using both bare-metal and hypervisor-based models depending on workload requirements. Critical services like firewalls, core routers, or database servers may run on bare metal for performance and reliability, while dynamic services, test environments, or cloud-native apps run on hypervisors or containers for flexibility and scalability. This hybrid approach provides the best of both worlds in terms of performance, cost-efficiency, and manageability.

Final Note

Understanding the trade-offs between hypervisor-based and bare-metal deployments is critical for anyone pursuing CCNP Enterprise (ENCOR) certification or working in enterprise network roles. Use this guide in your practice labs, real-world projects, and interviews to show a solid grasp of architectural planning and CLI-level configuration skills.

If you found this article helpful and want to take your skills to the next level, I invite you to join my Instructor-Led Weekend Batch for:

CCNP Enterprise to CCIE Enterprise – Covering ENCOR, ENARSI, SD-WAN, and more!

Get hands-on labs, real-world projects, and industry-grade training that strengthens your Routing & Switching foundations while preparing you for advanced certifications and job roles.

Email: info@networkjourney.com

WhatsApp / Call: +91 97395 21088

Upskill now and future-proof your networking career!

![Are Hypervisors Replacing Bare Metal in 2025? Here’s What You Should Know. [CCNP ENTERPRISE]](https://networkjourney.com/wp-content/uploads/2025/06/Are-Hypervisors-Replacing-Bare-Metal-in-2025_networkjourney.png)
